2. Introduction to Machine Learning

In this chapter, we’ll briefly review machine learning concepts that will be relevant later. We’ll focus in particular on the problem of prediction, that is, to model some output variable as a function of observed input covariates.

# importing the packages

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import random

import math

import warnings

from sklearn.metrics import mean_squared_error

from SyncRNG import SyncRNG

warnings.filterwarnings('ignore')

%matplotlib inline

In this section, we will use simulated data. In the next section we’ll load a real dataset.

# Simulating data

# Sample size

n = 500

# Generating covariate X ~ Unif[-4, 4]

x = np.linspace(-4,4, n) #with linspace we can generate a vector of "n" numbers between a range of numbers

random.shuffle(x)

mu = np.where(x<0, np.cos(2*x), 1 - np.sin(x) )

y = mu + 1*np.random.normal(size =n)

# collecting observations in a data.frame object

data = pd.DataFrame(np.array([x,y]).T, columns=['x','y'])

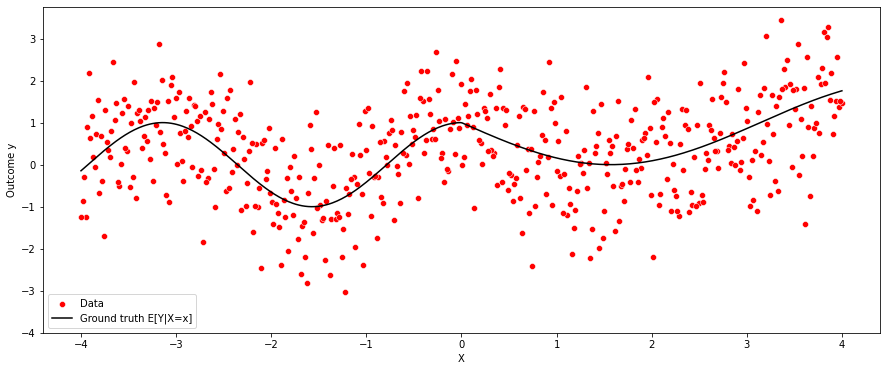

The following shows how the two variables x and y relate. Note that the relationship is nonlinear.

plt.figure(figsize=(15,6))

sns.scatterplot(x=x, y=y, color = 'red', label = 'Data')

sns.lineplot(x=x, y=mu, color = 'black', label = "Ground truth E[Y|X=x]")

plt.yticks(np.arange(-4,4,1))

plt.legend()

plt.xlabel("X")

plt.ylabel("Outcome y")

Text(0, 0.5, 'Outcome y')

Note: If you’d like to run the code below on a different dataset, you can replace the dataset above with another DataFrame of your choice, and redefine the key variable identifiers (outcome, covariates) accordingly. Although we try to make the code as general as possible, you may also need to make a few minor changes to the code below; read the comments carefully.

2.1. Key concepts

The prediction problem is to accurately guess the value of some output variable \(Y_i\) from input variables \(X_i\). For example, we might want to predict house prices given house characteristics such as the number of rooms, age of the building, and so on. The relationship between input and output is modeled in very general terms by some function \(f\),

\[Y_i = f(X_i) + \epsilon_i \tag{2.1}\]

where \(\epsilon_i\) represents all that is not captured by information obtained from \(X_i\) via the mapping \(f\). We say that error \(\epsilon_i\) is irreducible.

We highlight that (2.1) is not modeling a causal relationship between inputs and outputs. For an extreme example, consider taking \(Y_i\) to be “distance from the equator” and \(X_i\) to be “average temperature.” We can still think of the problem of guessing (“predicting”) “distance from the equator” given some information about “average temperature,” even though one would expect the former to cause the latter.

In general, we can’t know the “ground truth” \(f\), so we will approximate it from data. Given \(n\) data points \(\{(X_1, Y_1), \cdots, (X_n, Y_n)\}\), our goal is to obtain an estimated model \(\hat{f}\) such that our predictions \(\widehat{Y}_i := \hat{f}(X_i)\) are “close” to the true outcome values \(Y_i\) given some criterion. To formalize this, we’ll follow these three steps:

Modeling: Decide on some suitable class of functions that our estimated model may belong to. In machine learning applications the class of functions can be very large and complex (e.g., deep decision trees, forests, high-dimensional linear models, etc). Also, we must decide on a loss function that serves as our criterion to evaluate the quality of our predictions (e.g., mean-squared error).

Fitting: Find the estimate \(\hat{f}\) that optimizes the loss function chosen in the previous step (e.g., the tree that minimizes the squared deviation between \(\hat{f}(X_i)\) and \(Y_i\) in our data).

Evaluation: Evaluate our fitted model \(\hat{f}\). That is, if we were given a new, yet unseen, input and output pair \((X',Y')\), we’d like to know if \(Y' \approx \hat{f}(X')\) by some metric.
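The three steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the data are re-simulated with the same shape as the example above, the degree `q = 3` is an arbitrary choice, and the helper `mse` and the half/half split are introduced here for the sketch rather than taken from the chapter.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated data with the same shape as the example above (an assumption of this sketch)
n = 500
x = rng.uniform(-4, 4, size=n)
mu = np.where(x < 0, np.cos(2 * x), 1 - np.sin(x))
y = mu + rng.normal(size=n)

# Hold out half of the sample to play the role of "new, yet unseen" data
x_train, y_train = x[: n // 2], y[: n // 2]
x_new, y_new = x[n // 2 :], y[n // 2 :]

# 1. Modeling: polynomials of degree q, scored by mean squared error
q = 3

def mse(y_true, y_pred):
    """Mean squared error between outcomes and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))

# 2. Fitting: least-squares coefficients (np.polyfit returns the highest power first)
coefs = np.polyfit(x_train, y_train, deg=q)

# 3. Evaluation: compare in-sample error with error on the held-out pairs
train_mse = mse(y_train, np.polyval(coefs, x_train))
new_mse = mse(y_new, np.polyval(coefs, x_new))
print(f"train MSE: {train_mse:.3f}, held-out MSE: {new_mse:.3f}")
```

The held-out error is the quantity of interest in the evaluation step; the in-sample error is shown only for comparison.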

For concreteness, let’s work through an example. Let’s say that, given the data simulated above, we’d like to predict \(Y_i\) from the first covariate \(X_{i1}\) only. Also, let’s say that our model class will be polynomials of degree \(q\) in \(X_{i1}\), and we’ll evaluate fit based on mean squared error. That is, \(\hat{f}(X_{i1}) = \hat{b}_0 + X_{i1}\hat{b}_1 + \cdots + X_{i1}^q \hat{b}_q\), where the coefficients are obtained by solving the following problem:

\[\hat{b} = \arg\min_{b} \sum_{i=1}^{n} \left(Y_i - b_0 - X_{i1} b_1 - \cdots - X_{i1}^q b_q\right)^2\]

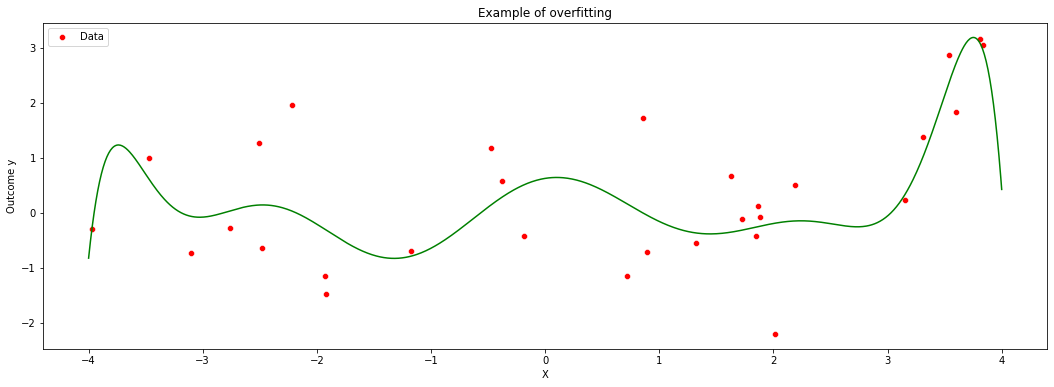

An important question is how to choose \(q\), the degree of the polynomial, which controls the complexity of the model. One might imagine that more complex models are always better, but that is not true: a very flexible model may simply interpolate the data at hand and then fail to generalize to new data points. We call this overfitting. The main feature of overfitting is high variance, in the sense that, if we were given a different data set of the same size, we’d likely get a very different model.

To illustrate, in the figure below we let the degree be \(q=10\) but use only the first few data points. The fitted model is shown in green, and the original data points are in red.

X = data.loc[:,'x'].values.reshape(-1, 1)

Y = data.loc[:,'y'].values.reshape(-1, 1)

# Note: this code assumes that the first covariate is continuous.

# Fitting a flexible model on very little data

# selecting only a few data points

subset = np.arange(0,30)

from sklearn.metrics import mean_squared_error

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

from sklearn.model_selection import train_test_split

poly = PolynomialFeatures(degree = 10)

X_poly = poly.fit_transform(X)

# Fit the degree-10 polynomial on the small subset only

lin2 = LinearRegression()

lin2.fit(X_poly[subset], Y[subset])

x = data['x']

xgrid = np.linspace(min(x),max(x), 1000)

new_data = pd.DataFrame(xgrid, columns=['x'])

# Reuse the already-fitted polynomial transformer on the prediction grid

yhat = lin2.predict(poly.transform(new_data.values))

# Visualising the Polynomial Regression results

plt.figure(figsize=(18,6))

sns.scatterplot(x=data.loc[subset,'x'], y=data.loc[subset,'y'], color = 'red', label = 'Data')

plt.plot(xgrid, yhat, color = 'green', label = 'Estimate')

plt.title('Example of overfitting')

plt.xlabel('X')

plt.ylabel('Outcome y')

Text(0, 0.5, 'Outcome y')

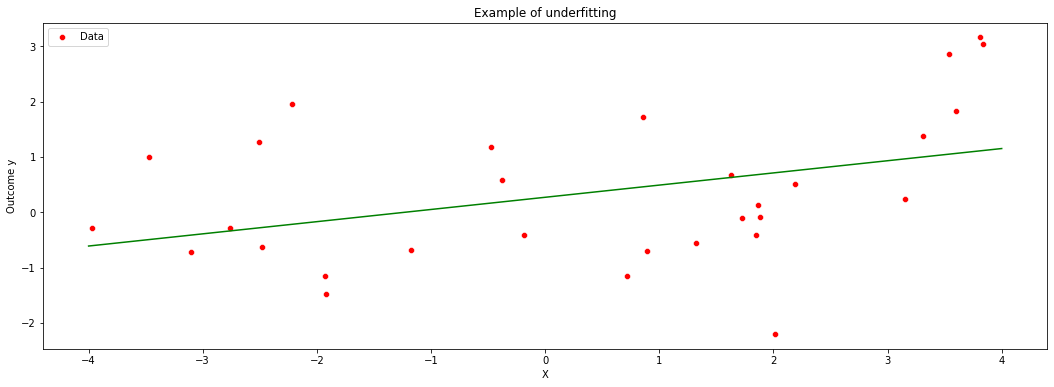
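The high-variance claim can also be checked numerically. The sketch below is an illustration, not part of the original analysis: it repeatedly draws fresh samples of 30 points from the same kind of simulated process, fits a polynomial each time, and measures how much the prediction at one fixed input moves from sample to sample. The evaluation point `x0 = 3.5`, the number of repetitions, and the helper names are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

def simulate(n):
    """Draw n observations from the simulated process used in this chapter."""
    x = rng.uniform(-4, 4, size=n)
    mu = np.where(x < 0, np.cos(2 * x), 1 - np.sin(x))
    return x, mu + rng.normal(size=n)

def prediction_variance(degree, x0=3.5, n=30, n_reps=200):
    """Variance of the fitted value at x0 across independent training samples."""
    preds = []
    for _ in range(n_reps):
        x, y = simulate(n)
        coefs = np.polyfit(x, y, deg=degree)
        preds.append(np.polyval(coefs, x0))
    return float(np.var(preds))

var_linear = prediction_variance(degree=1)
var_flexible = prediction_variance(degree=10)
print(f"variance at x0=3.5: degree 1 = {var_linear:.3f}, degree 10 = {var_flexible:.3f}")
```

With only 30 observations, the degree-10 predictions should vary far more across samples than the linear ones, which is what "a very different model" on a different data set means in practice.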

On the other hand, when \(q\) is too small relative to our data, we permit only very simple models and may suffer from misspecification bias. We call this underfitting. The main feature of underfitting is high bias – the selected model just isn’t complex enough to accurately capture the relationship between input and output variables.

To illustrate underfitting, in the figure below we set \(q=1\) (a linear fit).

lin = LinearRegression()

lin.fit(X[0:30], Y[0:30])

x = data['x']

xgrid = np.linspace(min(x),max(x), 1000)

new_data = pd.DataFrame(xgrid, columns=['x'])

yhat = lin.predict(new_data)

plt.figure(figsize=(18,6))

sns.scatterplot(x=data.loc[subset,'x'], y=data.loc[subset,'y'], color = 'red', label = 'Data')

plt.plot(xgrid, yhat, color = 'green',label = 'Estimate')

plt.title('Example of underfitting')

plt.xlabel('X')

plt.ylabel('Outcome y')

Text(0, 0.5, 'Outcome y')

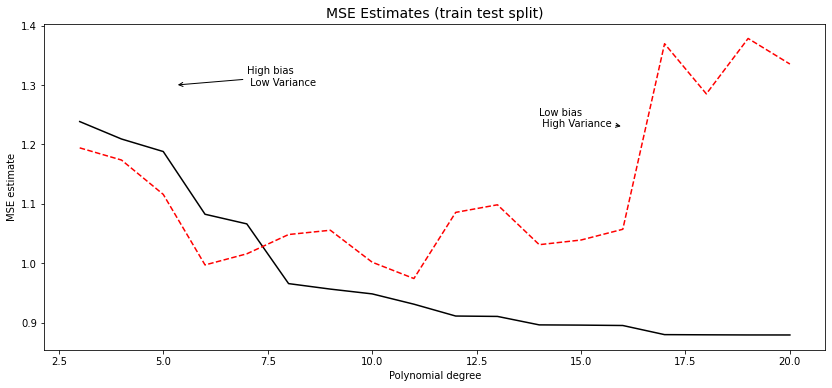

This tension is called the bias-variance trade-off: simpler models underfit and have more bias, more complex models overfit and have more variance.
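The trade-off can be written down for squared-error loss. As a sketch under the following assumptions: fix an input value \(x\), let a new outcome be \(Y' = f(x) + \epsilon'\) with \(E[\epsilon'] = 0\) and \(\mathrm{Var}(\epsilon') = \sigma^2\), and let \(\epsilon'\) be independent of the training sample used to compute \(\hat{f}\). Taking expectations over both the training sample and \(\epsilon'\), the expected prediction error splits into three terms:

```latex
E\left[\left(Y' - \hat{f}(x)\right)^2\right]
  = \underbrace{\sigma^2}_{\text{irreducible error}}
  + \underbrace{\left(E[\hat{f}(x)] - f(x)\right)^2}_{\text{squared bias}}
  + \underbrace{\mathrm{Var}\left(\hat{f}(x)\right)}_{\text{variance}}
```

Simple model classes tend to make the second term large, very flexible ones tend to make the third term large, and no choice of model affects the first.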

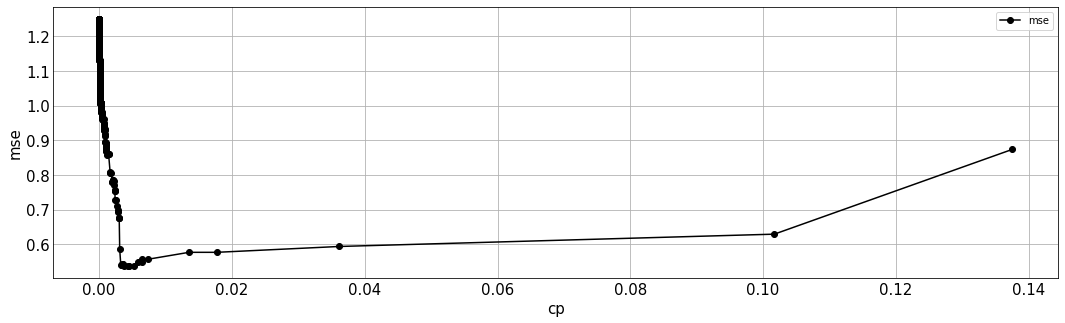

One data-driven way of deciding an appropriate level of complexity is to divide the available data into a training set (where the model is fit) and a validation set (where the model is evaluated). The next snippet of code uses a random half of the data to fit a polynomial of order \(q\), and then evaluates that polynomial on the other half. The training MSE estimate decreases monotonically with the polynomial degree, because a more flexible model is better able to fit the training data; the validation MSE estimate starts increasing after a while, reflecting that the model no longer generalizes well.

degrees =np.arange(3,21)

train_mse =[]

test_mse =[]

for d in degrees:

    poly = PolynomialFeatures(degree = d, include_bias = False)

    poly_features = poly.fit_transform(X)

    # Random 50/50 split into a training half and a validation half

    X_train, X_test, y_train, y_test = train_test_split(poly_features, y, train_size=0.5, random_state=0)

    poly_reg_model = LinearRegression()

    poly_reg_model.fit(X_train, y_train)

    y_train_pred = poly_reg_model.predict(X_train)

    y_test_pred = poly_reg_model.predict(X_test)

    mse_train = mean_squared_error(y_train, y_train_pred)

    mse_test = mean_squared_error(y_test, y_test_pred)

    train_mse.append(mse_train)

    test_mse.append(mse_test)

fig, ax = plt.subplots(figsize=(14,6))

ax.plot(degrees, train_mse,color ="black", label = "Training")

ax.plot(degrees, test_mse,"r--", label = "Validation")

ax.set_title("MSE Estimates (train test split)", fontsize =14)

ax.set(xlabel = "Polynomial degree", ylabel = "MSE estimate")

ax.annotate("Low bias \n High Variance", xy=(16, 1.23), xycoords='data', xytext=(14, 1.23), textcoords='data',

arrowprops=dict(arrowstyle="->",connectionstyle="arc3"),)

ax.annotate("High bias \n Low Variance", xy=(5.3, 1.30), xycoords='data', xytext=(7, 1.30), textcoords='data',

arrowprops=dict(arrowstyle="->",connectionstyle="arc3"),)

Text(7, 1.3, 'High bias \n Low Variance')

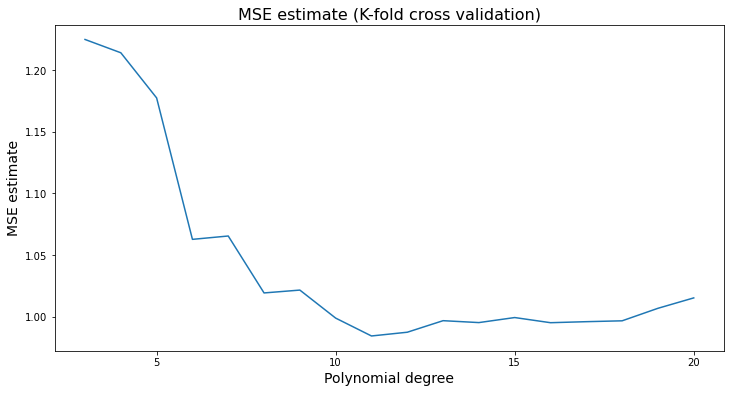

To make better use of the data we will often divide the data into \(K\) subsets, or folds. Then one fits \(K\) models, each using \(K-1\) folds, and evaluates each fitted model on the remaining fold. This is called K-fold cross-validation.

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import make_scorer

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import KFold

mse = []

for d in degrees:

    poly = PolynomialFeatures(degree = d, include_bias = False)

    poly_features = poly.fit_transform(X)

    ols = LinearRegression()

    # make_scorer wraps mean_squared_error so that cross_val_score returns the MSE on each fold

    scorer = make_scorer(mean_squared_error)

    mse_test = cross_val_score(ols, poly_features, y, scoring=scorer, cv=5).mean()

    mse.append(mse_test)

plt.figure(figsize=(12,6))

plt.plot(degrees, mse)

plt.xlabel('Polynomial degree', fontsize = 14)

plt.xticks(np.arange(5,21,5))

plt.ylabel('MSE estimate', fontsize = 14)

plt.title('MSE estimate (K-fold cross validation)', fontsize =16)

# Unlike in the R version of this tutorial, the Python models here perform better with more training data,

# a consequence of the cross-validation and K-fold setup

Text(0.5, 1.0, 'MSE estimate (K-fold cross validation)')

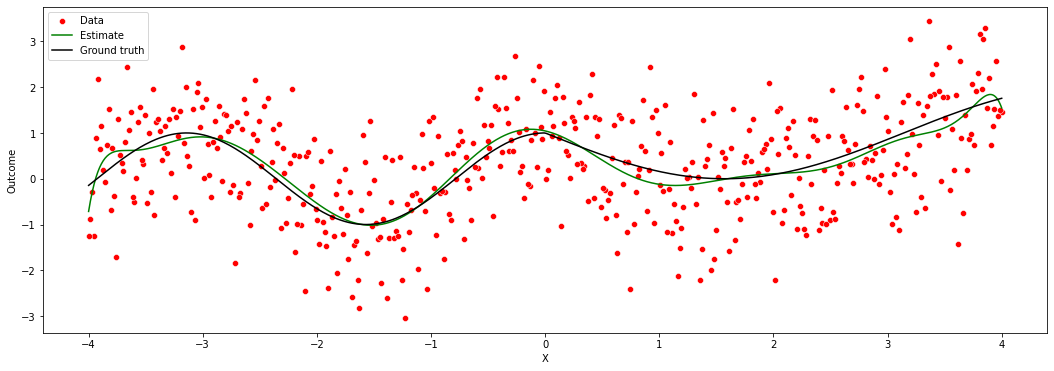
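To make the mechanics of K-fold cross-validation concrete, here is a hand-rolled sketch that avoids scikit-learn's helpers. It is an illustration under assumptions: the data are re-simulated, the function name `kfold_mse` is hypothetical, and polynomial fitting is done with `np.polyfit` rather than the pipeline used above.

```python
import numpy as np

rng = np.random.default_rng(2)

# Re-simulated data with the same shape as the chapter's example
n = 500
x = rng.uniform(-4, 4, size=n)
y = np.where(x < 0, np.cos(2 * x), 1 - np.sin(x)) + rng.normal(size=n)

def kfold_mse(x, y, degree, n_folds=5):
    """Average held-out MSE of a degree-`degree` polynomial over `n_folds` folds."""
    indices = rng.permutation(len(x))
    folds = np.array_split(indices, n_folds)
    fold_mse = []
    for k in range(n_folds):
        # Fold k is held out; the other K-1 folds are used for fitting
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        coefs = np.polyfit(x[train_idx], y[train_idx], deg=degree)
        resid = y[test_idx] - np.polyval(coefs, x[test_idx])
        fold_mse.append(np.mean(resid ** 2))
    return float(np.mean(fold_mse))

cv_mse = {d: kfold_mse(x, y, d) for d in (1, 4, 8)}
print(cv_mse)
```

Every observation is used for evaluation exactly once and for fitting \(K-1\) times, which is why this makes better use of the data than a single split.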

A final remark is that, in machine learning applications, the complexity of the model often is allowed to increase with the available data. In the example above, even though we weren’t very successful when fitting a high-dimensional model on very little data, if we had much more data perhaps such a model would be appropriate. The next figure again fits a high order polynomial model, but this time on many data points. Note how, at least in data-rich regions, the model is much better behaved, and tracks the average outcome reasonably well without trying to interpolate wildly between the data points.

X = data.loc[:,'x'].values.reshape(-1, 1)

Y = data.loc[:,'y'].values.reshape(-1, 1)

subset = np.arange(0,500)

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures(degree = 15)

X_poly = poly.fit_transform(X)

# Fit the degree-15 polynomial on the full sample

lin2 = LinearRegression()

lin2.fit(X_poly[subset], Y[subset])

x = data['x']

xgrid = np.linspace(min(x),max(x), 1000)

new_data = pd.DataFrame(xgrid, columns=['x'])

# Reuse the already-fitted polynomial transformer on the prediction grid

yhat = lin2.predict(poly.transform(new_data.values))

# Visualising the Polynomial Regression results

plt.figure(figsize=(18,6))

sns.scatterplot(x=data.loc[subset,'x'], y=data.loc[subset,'y'], color = 'red', label = 'Data')

plt.plot(xgrid, yhat, color = 'green', label = 'Estimate')

sns.lineplot(x=x, y=mu, color = 'black', label = "Ground truth")

plt.xlabel('X')

plt.ylabel('Outcome')

Text(0, 0.5, 'Outcome')

This is one of the benefits of using machine learning-based models: more data implies more flexible modeling, and therefore potentially better predictive power – provided that we carefully avoid overfitting.

The example above based on polynomial regression was used mostly for illustration. In practice, there are often better-performing algorithms. We’ll see some of them next.

2.2. Common machine learning algorithms

Next, we’ll introduce three machine learning algorithms: (regularized) linear models, trees, and forests. Although this isn’t an exhaustive list, these algorithms are common enough that every machine learning practitioner should know about them. They also have convenient Python implementations (for example, in scikit-learn) that allow for easy coding.

In this tutorial, we’ll focus heavily on how to interpret the output of machine learning models – or, at least, how not to mis-interpret it. However, in this chapter we won’t be making any causal claims about the relationships between variables yet. But please hang tight, as estimating causal effects will be one of the main topics presented in the next chapters.

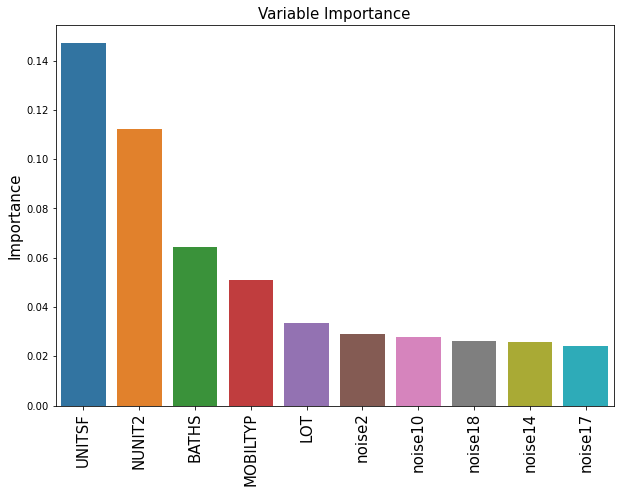

For the remainder of the chapter we will use a real dataset. Each row in this data set represents the characteristics of an owner-occupied housing unit. Our goal is to predict the (log) price of the housing unit (LOGVALUE, our outcome variable) from features such as the size of the lot (LOT) and square feet area (UNITSF), number of bedrooms (BEDRMS) and bathrooms (BATHS), year in which it was built (BUILT), etc. This dataset comes from the American Housing Survey and was used in Mullainathan and Spiess (2017, JEP). In addition, we will append to this data columns that are pure noise. Ideally, our fitted model should not take them into account.

import requests

import io

url = 'https://docs.google.com/uc?id=1qHr-6nN7pCbU8JUtbRDtMzUKqS9ZlZcR&export=download'

urlData = requests.get(url).content

data = pd.read_csv(io.StringIO(urlData.decode('utf-8')))

data.drop(['Unnamed: 0'], axis=1, inplace=True)

# outcome variable name

outcome = 'LOGVALUE'

# covariates

true_covariates = ['LOT','UNITSF','BUILT','BATHS','BEDRMS','DINING','METRO','CRACKS','REGION','METRO3','PHONE','KITCHEN','MOBILTYP','WINTEROVEN','WINTERKESP','WINTERELSP','WINTERWOOD','WINTERNONE','NEWC','DISH','WASH','DRY','NUNIT2','BURNER','COOK','OVEN','REFR','DENS','FAMRM','HALFB','KITCH','LIVING','OTHFN','RECRM','CLIMB','ELEV','DIRAC','PORCH','AIRSYS','WELL','WELDUS','STEAM','OARSYS']

p_true = len(true_covariates)

# noise covariates added for didactic reasons

p_noise = 20

noise_covariates = ['noise{0}'.format(i) for i in range(1, p_noise + 1)]

covariates = true_covariates + noise_covariates

# Draw the noise columns with as many rows as the data (no hard-coded shape)

x_noise = np.random.rand(data.shape[0], p_noise)

x_noise = pd.DataFrame(x_noise, columns=noise_covariates)

data = pd.concat([data, x_noise], axis=1)

# sample size

n = data.shape[0]

# total number of covariates

p = len(covariates)

Here’s the correlation between the first few covariates. Note how most variables are positively correlated, which is expected since houses with more bedrooms will usually also have more bathrooms, larger area, etc.

data.loc[:,covariates[0:8]].corr()

| | LOT | UNITSF | BUILT | BATHS | BEDRMS | DINING | METRO | CRACKS |
|---|---|---|---|---|---|---|---|---|
| LOT | 1.000000 | 0.064841 | 0.044639 | 0.057325 | 0.009626 | -0.015348 | 0.136258 | 0.016851 |
| UNITSF | 0.064841 | 1.000000 | 0.143201 | 0.428723 | 0.361165 | 0.214030 | 0.057441 | 0.033548 |
| BUILT | 0.044639 | 0.143201 | 1.000000 | 0.434519 | 0.215109 | 0.037468 | 0.323703 | 0.092390 |
| BATHS | 0.057325 | 0.428723 | 0.434519 | 1.000000 | 0.540230 | 0.259457 | 0.189812 | 0.062819 |
| BEDRMS | 0.009626 | 0.361165 | 0.215109 | 0.540230 | 1.000000 | 0.281846 | 0.121331 | 0.026779 |
| DINING | -0.015348 | 0.214030 | 0.037468 | 0.259457 | 0.281846 | 1.000000 | 0.022026 | 0.021270 |
| METRO | 0.136258 | 0.057441 | 0.323703 | 0.189812 | 0.121331 | 0.022026 | 1.000000 | 0.057545 |
| CRACKS | 0.016851 | 0.033548 | 0.092390 | 0.062819 | 0.026779 | 0.021270 | 0.057545 | 1.000000 |

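2.2.1. Generalized linear models

This class of models extends common methods such as linear and logistic regression by adding a penalty to the magnitude of the coefficients. Lasso penalizes the absolute value of slope coefficients. For regression problems, it becomes

\[\hat{b}_{Lasso} = \arg\min_{b} \sum_{i=1}^{n} \left(Y_i - b_0 - X_{i1} b_1 - \cdots - X_{ip} b_p\right)^2 + \lambda \sum_{j=1}^{p} |b_j| \tag{2.2}\]

Similarly, in a regression problem Ridge penalizes the sum of squares of the slope coefficients,

\[\hat{b}_{Ridge} = \arg\min_{b} \sum_{i=1}^{n} \left(Y_i - b_0 - X_{i1} b_1 - \cdots - X_{ip} b_p\right)^2 + \lambda \sum_{j=1}^{p} b_j^2 \tag{2.3}\]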

Also, there exists the Elastic Net penalization which consists of a convex combination between the other two. In all cases, the scalar parameter \(\lambda\) controls the complexity of the model. For \(\lambda=0\), the problem reduces to the “usual” linear regression. As \(\lambda\) increases, we favor simpler models. As we’ll see below, the optimal parameter \(\lambda\) is selected via cross-validation.

An important feature of Lasso-type penalization is that it promotes sparsity – that is, it forces many coefficients to be exactly zero. This is different from Ridge-type penalization, which forces coefficients to be small.
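The difference between "exactly zero" and "small" is easiest to see in a simplified setting. The sketch below assumes a single coefficient (equivalently, orthonormal covariates, where the penalized problems separate coordinate by coordinate) and uses a convenient scaling of the objective with factors of one half, which is not the scaling of the general problems in this section. The input values `z` are hypothetical unpenalized estimates.

```python
import numpy as np

def lasso_1d(z, lam):
    """Minimizer of 0.5 * (z - b)**2 + lam * |b|, known as soft-thresholding."""
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

def ridge_1d(z, lam):
    """Minimizer of 0.5 * (z - b)**2 + 0.5 * lam * b**2, a proportional shrinkage."""
    return z / (1.0 + lam)

# Hypothetical unpenalized estimates, one per covariate
z = np.array([2.0, -1.2, 0.3, -0.1, 0.05])
lam = 0.5

b_lasso = lasso_1d(z, lam)
b_ridge = ridge_1d(z, lam)
print("lasso:", b_lasso)
print("ridge:", b_ridge)
```

In this setting the Lasso solution subtracts `lam` from the magnitude of each estimate and truncates at zero, so any estimate smaller than `lam` in absolute value is removed entirely. The Ridge solution rescales every estimate by the same factor, so none of them reaches zero.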

Another interesting property of these models is that, even though they are called “linear” models, this should actually be understood as linear in transformations of the covariates. For example, we could use polynomials or splines (continuous piecewise polynomials) of the covariates and allow for much more flexible models.

In fact, because of the penalization term, problems (2.2) and (2.3) remain well-defined and have a unique solution even in high-dimensional problems in which the number of coefficients \(p\) is larger than the sample size \(n\) – that is, our data is “fat” with more columns than rows. These situations can arise either naturally (e.g. genomics problems in which we have expression measurements on hundreds of thousands of genes for a few individuals) or because we are including many transformations of a smaller set of covariates.

Finally, although here we are focusing on regression problems, other generalized linear models such as logistic regression can also be similarly modified by adding a Lasso, Ridge, or Elastic Net-type penalty, with similar consequences.
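For the Ridge case, the effect of the penalty on "fat" data can be verified directly with linear algebra. The following sketch makes several simplifying assumptions: the data are synthetic, there is no intercept, and the closed-form Ridge solution \((X'X + \lambda I)^{-1} X'Y\) is computed by hand rather than with a library estimator.

```python
import numpy as np

rng = np.random.default_rng(3)

n, p = 20, 50  # "fat" data: more columns than rows
X = rng.normal(size=(n, p))
y = X[:, 0] - 2 * X[:, 1] + rng.normal(size=n)

# X'X is p x p but its rank cannot exceed n, so it is singular when p > n
gram = X.T @ X
print("rank of X'X:", np.linalg.matrix_rank(gram), "out of", p)

# Without a penalty, the normal equations X'X b = X'y have infinitely many solutions.
# Adding lam * I makes the matrix positive definite, so the solution is unique.
lam = 1.0
penalized = gram + lam * np.eye(p)
b_ridge = np.linalg.solve(penalized, X.T @ y)
print("smallest eigenvalue after penalization:", np.linalg.eigvalsh(penalized).min())
```

The smallest eigenvalue of the penalized matrix is at least `lam`, which is what guarantees a unique solution no matter how many columns are added.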

X = data.loc[:,covariates]

Y = data.loc[:,outcome]

from sklearn.linear_model import Lasso

lasso = Lasso()

alphas = np.logspace(np.log10(1e-8), np.log10(1e-1), 100)

tuned_parameters = [{"alpha": alphas}]

n_folds = 10

scorer = make_scorer(mean_squared_error)

clf = GridSearchCV(lasso, tuned_parameters, cv=n_folds, refit=False, scoring=scorer)

clf.fit(X, Y)

scores = clf.cv_results_["mean_test_score"]

scores_std = clf.cv_results_["std_test_score"]

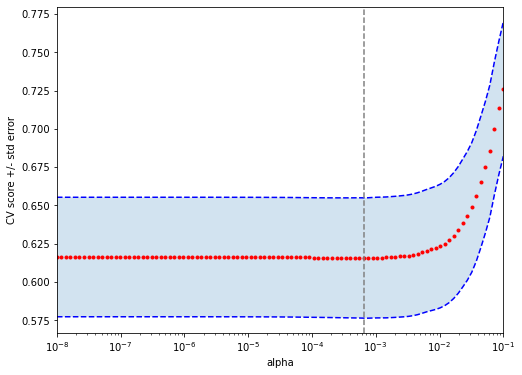

The next figure plots the average estimated MSE for each lambda (called alpha in scikit-learn). The red dots are the averages across all folds, and the dashed blue band marks plus or minus one standard error, based on the variability of MSE estimates across folds. The vertical dashed line shows the lambda with the smallest estimated MSE; a common, more conservative alternative is the largest lambda whose MSE is at most one standard error above that minimum.

data_lasso = pd.DataFrame([pd.Series(alphas, name= "alphas"), pd.Series(scores, name = "scores")]).T

best = data_lasso[data_lasso["scores"] == np.min(data_lasso["scores"])]

plt.figure().set_size_inches(8, 6)

plt.semilogx(alphas, scores, ".", color = "red")

# plot error lines showing +/- std. errors of the scores

std_error = scores_std / np.sqrt(n_folds)

plt.semilogx(alphas, scores + std_error, "b--")

plt.semilogx(alphas, scores - std_error, "b--")

# alpha=0.2 controls the translucency of the fill color

plt.fill_between(alphas, scores + std_error, scores - std_error, alpha=0.2)

plt.ylabel("CV score +/- std error")

plt.xlabel("alpha")

plt.axvline(best.iloc[0,0], linestyle="--", color=".5")

plt.xlim([alphas[0], alphas[-1]])

(1e-08, 0.1)
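The "one standard error" choice of penalty mentioned above can be computed from the same cross-validation summaries. The sketch below uses small made-up arrays in place of the real `alphas`, `scores`, and `std_error` so that the logic is easy to follow; the numbers are hypothetical and are not results from the housing data.

```python
import numpy as np

# Hypothetical cross-validation summaries, one entry per candidate penalty
alphas = np.array([1e-4, 1e-3, 1e-2, 1e-1, 1.0])
cv_mse = np.array([1.10, 1.02, 1.00, 1.03, 1.40])
cv_se = np.array([0.05, 0.05, 0.04, 0.05, 0.06])

# Penalty with the smallest estimated MSE
i_min = int(np.argmin(cv_mse))
alpha_min = alphas[i_min]

# Largest penalty whose MSE is within one standard error of the minimum
threshold = cv_mse[i_min] + cv_se[i_min]
alpha_1se = alphas[cv_mse <= threshold].max()

print("alpha_min:", alpha_min, "alpha_1se:", alpha_1se)
```

Choosing the larger penalty trades a statistically negligible loss in estimated MSE for a simpler model.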

Here are the first few estimated coefficients at the \(\lambda\) value that minimizes cross-validated MSE. Note that a number of the estimated coefficients are exactly zero.

lasso = Lasso(alpha=best.iloc[0,0])

lasso.fit(X,Y)

table = np.zeros((1,5))

table[0,0] = lasso.intercept_

table[0,1] = lasso.coef_[0]

table[0,2] = lasso.coef_[1]

table[0,3] = lasso.coef_[2]

table[0,4] = lasso.coef_[3]

pd.DataFrame(table, columns=['(Intercept)','LOT','UNITSF','BUILT','BATHS'], index=['Coef.'])

| | (Intercept) | LOT | UNITSF | BUILT | BATHS |
|---|---|---|---|---|---|
| Coef. | 11.643421 | 3.494443e-07 | 0.000023 | 0.000229 | 0.246402 |

print("Number of nonzero coefficients at optimal lambda:", len(lasso.coef_[lasso.coef_ != 0]), "out of " , len(lasso.coef_))

Number of nonzero coefficients at optimal lambda: 46 out of 63

Predictions and estimated MSE for the selected model are retrieved as follows.

# Retrieve predictions at best lambda regularization parameter

y_hat = lasso.predict(X)

# Cross-validated MSE at the best alpha

mse_lasso = best.iloc[0,1]

print("Lasso MSE estimate (k-fold cross-validation):", mse_lasso)

Lasso MSE estimate (k-fold cross-validation): 0.6156670911339063

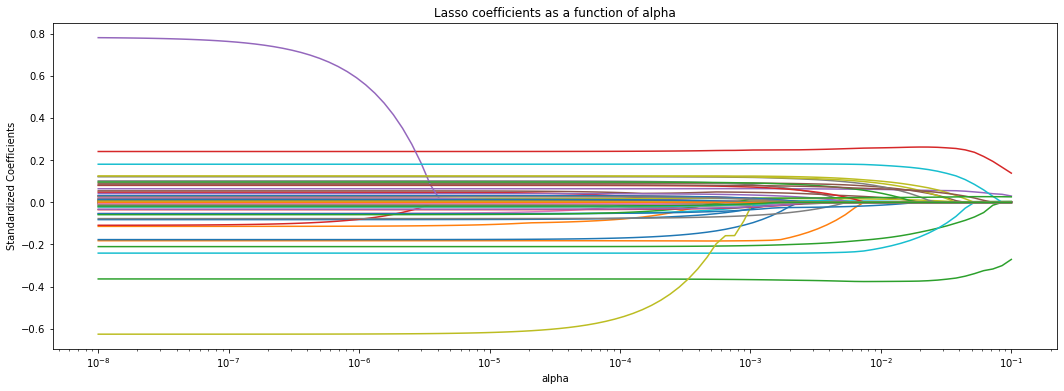

The next command plots estimated coefficients as a function of the regularization parameter \(\lambda\).

coefs = []

for a in alphas:

    lasso.set_params(alpha=a)

    lasso.fit(X, Y)

    coefs.append(lasso.coef_)

from matplotlib.pyplot import figure

plt.figure(figsize=(18,6))

plt.gca().plot(alphas, coefs)

plt.gca().set_xscale('log')

plt.axis('tight')

plt.xlabel('alpha')

plt.ylabel('Standardized Coefficients')

plt.title('Lasso coefficients as a function of alpha');

It’s tempting to try to interpret the coefficients obtained via Lasso. Unfortunately, that can be very difficult, because by dropping covariates Lasso introduces a form of omitted variable bias. To understand this form of bias, consider the following toy example. We have two positively correlated independent variables, x1 and x2, that are linearly related to the outcome y. Linear regression of y on x1 and x2 gives us the correct coefficients. However, if we omit x2 from the estimation model, the coefficient on x1 increases. This is because x1 is now “picking up” the effect of the variable that was left out. In other words, the effect of x1 seems stronger because we aren’t controlling for some other confounding variable. Note that the second model still works for prediction, but we cannot interpret the coefficient as a measure of strength of the causal relationship between x1 and y.

mean = [0.0,0.0]

cov = [[1.5,1],[1,1.5]]

x1, x2 = np.random.multivariate_normal(mean, cov, 100000).T

y = 1 + 2*x1 + 3*x2 + np.random.rand(100000)

data_sim = pd.DataFrame(np.array([x1,x2,y]).T,columns=['x1','x2','y'] )

print('Correct Model')

Correct Model

import statsmodels.formula.api as smf

result = smf.ols('y ~ x1 + x2', data = data_sim).fit()

print(result.summary())

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.997

Model: OLS Adj. R-squared: 0.997

Method: Least Squares F-statistic: 1.897e+07

Date: Wed, 22 Jun 2022 Prob (F-statistic): 0.00

Time: 20:59:12 Log-Likelihood: -17706.

No. Observations: 100000 AIC: 3.542e+04

Df Residuals: 99997 BIC: 3.545e+04

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Intercept 1.5012 0.001 1643.500 0.000 1.499 1.503

x1 1.9998 0.001 1996.643 0.000 1.998 2.002

x2 3.0011 0.001 3002.007 0.000 2.999 3.003

==============================================================================

Omnibus: 90005.976 Durbin-Watson: 2.010

Prob(Omnibus): 0.000 Jarque-Bera (JB): 6016.746

Skew: -0.006 Prob(JB): 0.00

Kurtosis: 1.798 Cond. No. 2.24

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

print("Model with omitted variable bias")

result = smf.ols('y ~ x1', data = data_sim).fit()

print(result.summary())

Model with omitted variable bias

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.760

Model: OLS Adj. R-squared: 0.760

Method: Least Squares F-statistic: 3.174e+05

Date: Wed, 22 Jun 2022 Prob (F-statistic): 0.00

Time: 20:59:21 Log-Likelihood: -2.4332e+05

No. Observations: 100000 AIC: 4.866e+05

Df Residuals: 99998 BIC: 4.867e+05

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Intercept 1.5107 0.009 173.262 0.000 1.494 1.528

x1 4.0084 0.007 563.401 0.000 3.994 4.022

==============================================================================

Omnibus: 0.159 Durbin-Watson: 2.003

Prob(Omnibus): 0.924 Jarque-Bera (JB): 0.158

Skew: -0.003 Prob(JB): 0.924

Kurtosis: 3.001 Cond. No. 1.23

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
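The size of the jump in the x1 coefficient, from about 2 to about 4, is what the standard omitted-variable-bias formula predicts: when x2 is left out, the slope on x1 converges to \(b_1 + b_2 \, \mathrm{Cov}(x_1, x_2) / \mathrm{Var}(x_1) = 2 + 3 \times 1/1.5 = 4\). The sketch below re-simulates the toy example with a fixed seed and checks this with a plain least-squares fit; the use of `np.linalg.lstsq` instead of statsmodels is a choice made for this example.

```python
import numpy as np

rng = np.random.default_rng(4)

n = 100_000
cov = np.array([[1.5, 1.0], [1.0, 1.5]])
x1, x2 = rng.multivariate_normal([0.0, 0.0], cov, size=n).T
y = 1 + 2 * x1 + 3 * x2 + rng.uniform(size=n)

# Value predicted by the omitted-variable formula: b1 + b2 * Cov(x1, x2) / Var(x1)
predicted = 2 + 3 * cov[0, 1] / cov[0, 0]

# Slope from regressing y on x1 alone (with an intercept)
design = np.column_stack([np.ones(n), x1])
intercept, slope = np.linalg.lstsq(design, y, rcond=None)[0]

print(f"formula: {predicted:.3f}, simulated short-regression slope: {slope:.3f}")
```

The intercept is close to 1.5 rather than 1 in both regressions because the noise term is uniform on [0, 1) and therefore has mean 0.5.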

The phenomenon above occurs in Lasso and in any other sparsity-promoting method when correlated covariates are present since, by forcing coefficients to be zero, Lasso is effectively dropping them from the model. And as we have seen, as a variable gets dropped, a different variable that is correlated with it can “pick up” its effect, which in turn can cause bias. Once \(\lambda\) grows sufficiently large, the penalization term overwhelms any benefit of having that variable in the model, so that variable finally decreases to zero too.

One may instead consider using Lasso to select a subset of variables, and then regressing the outcome on the subset of selected variables via OLS (without any penalization). This method is often called post-lasso. Although it has desirable properties in terms of model fit (see e.g., Belloni and Chernozhukov, 2013), this procedure does not solve the omitted variable issue we mentioned above.
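The two-step post-lasso procedure can be sketched as follows. This is an illustration on synthetic data, not the housing data: the data-generating process, the penalty `alpha=0.1`, and the variable names are assumptions made for the example.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression

rng = np.random.default_rng(5)

# Synthetic data (an assumption of this sketch): only the first 3 of 10 covariates matter
n, p = 500, 10
X = rng.normal(size=(n, p))
beta = np.zeros(p)
beta[:3] = [3.0, -2.0, 1.5]
y = X @ beta + rng.normal(size=n)

# Step 1: Lasso is used only to select covariates
lasso = Lasso(alpha=0.1).fit(X, y)
selected = np.flatnonzero(lasso.coef_)

# Step 2: unpenalized OLS on the selected covariates
post = LinearRegression().fit(X[:, selected], y)

print("selected columns:", selected)
print("lasso coefficients:     ", np.round(lasso.coef_[selected], 3))
print("post-lasso coefficients:", np.round(post.coef_, 3))
```

The second step removes the shrinkage that the penalty applies to the selected coefficients. It does nothing about covariates that were dropped in the first step, which is why the omitted variable issue remains.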

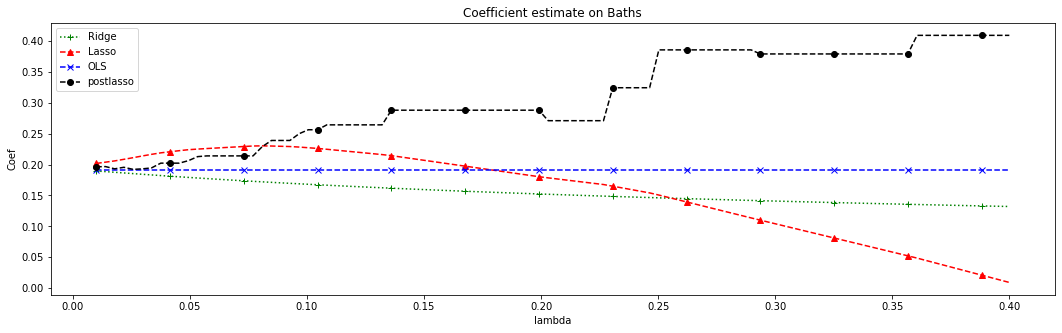

We illustrate this next. We observe the path of the estimated coefficient on the number of bathrooms (BATHS) as we increase \(\lambda\).

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import Ridge

scale_X = StandardScaler().fit(X).transform(X)

ols = LinearRegression()

ols.fit(scale_X,Y)

ols_coef = ols.coef_[3]

lamdas = np.linspace(0.01,0.4, 100)

coef_ols = np.repeat(ols_coef,100)

###############################################

lasso_bath_coef = []

lasso_coefs=[]

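for a in lamdas:

    # scale_X is already standardized, so no extra normalization is requested

    lasso.set_params(alpha=a)

    lasso.fit(scale_X, Y)

    lasso_bath_coef.append(lasso.coef_[3])

    lasso_coefs.append(lasso.coef_)

#################################################

ridge_bath_coef = []

for a in lamdas:

    # The `normalize` argument was removed in scikit-learn 1.2; on standardized data,

    # Ridge(alpha=a, normalize=True) corresponds to Ridge(alpha=a * n_samples)

    ridge = Ridge(alpha=a * scale_X.shape[0])

    ridge.fit(scale_X, Y)

    ridge_bath_coef.append(ridge.coef_[3])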

####################################################

poslasso_coef = [ ]

for a in range(100):

    # Keep only the covariates that Lasso selected at this value of lambda

    selected_X = X.iloc[:, (lasso_coefs[a] != 0)]

    scale_X = StandardScaler().fit(selected_X).transform(selected_X)

    ols = LinearRegression()

    ols.fit(scale_X,Y)

    post_coef = ols.coef_[selected_X.columns.get_loc('BATHS')]

    poslasso_coef.append(post_coef)

#################################################

plt.figure(figsize=(18,5))

plt.plot(lamdas, ridge_bath_coef, label = 'Ridge', color = 'g', marker='+', linestyle = ':',markevery=8)

plt.plot(lamdas, lasso_bath_coef, label = 'Lasso', color = 'r', marker = '^',linestyle = 'dashed',markevery=8)

plt.plot(lamdas, coef_ols, label = 'OLS', color = 'b',marker = 'x',linestyle = 'dashed',markevery=8)

plt.plot(lamdas, poslasso_coef, label = 'postlasso',color='black',marker = 'o',linestyle = 'dashed',markevery=8 )

plt.legend()

plt.title("Coefficient estimate on Baths")

plt.ylabel('Coef')

plt.xlabel('lambda')

Text(0.5, 0, 'lambda')

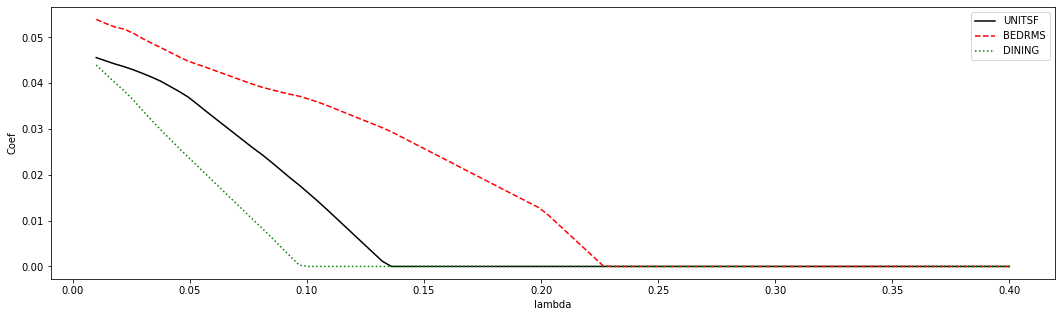

The OLS coefficients are not penalized, so they remain constant. Ridge estimates decrease monotonically as \(\lambda\) grows. Also, for this dataset, Lasso estimates first increase and then decrease. Meanwhile, the post-lasso coefficient estimates seem to behave somewhat erratically with \(\lambda\). To understand this behavior, let’s see what happens to the magnitude of other selected variables that are correlated with BATHS.

scale_X = StandardScaler().fit(X).transform(X)

UNITSF_coef = []

BEDRMS_coef = []

DINING_coef = []

for a in lamdas:

    # scale_X is already standardized, so no extra normalization is requested

    lasso.set_params(alpha=a)

    lasso.fit(scale_X, Y)

    UNITSF_coef.append(lasso.coef_[1])

    BEDRMS_coef.append(lasso.coef_[4])

    DINING_coef.append(lasso.coef_[5])

plt.figure(figsize=(18,5))

plt.plot(lamdas, UNITSF_coef,label = 'UNITSF', color = 'black' )

plt.plot(lamdas, BEDRMS_coef,label = 'BEDRMS', color = 'red', linestyle = '--')

plt.plot(lamdas, DINING_coef,label = 'DINING', color = 'g',linestyle = 'dotted')

plt.legend()

plt.ylabel('Coef')

plt.xlabel('lambda')

Text(0.5, 0, 'lambda')

Note how the discrete jumps in magnitude for the BATHS coefficient in the first figure coincide with, for example, variables DINING and BEDRMS becoming exactly zero. As these variables got dropped from the model, the coefficient on BATHS increased to pick up their effect.

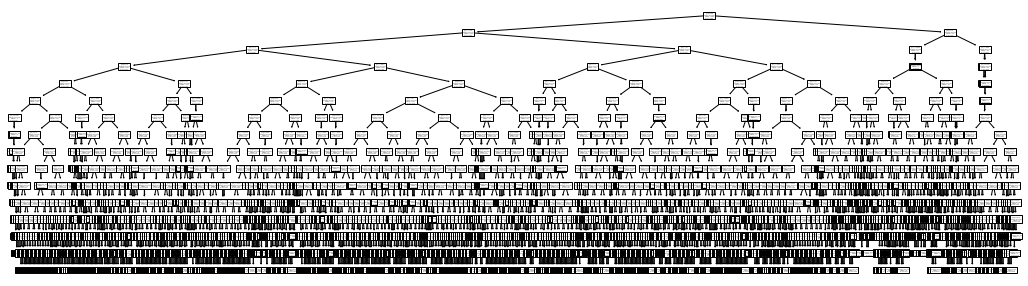

Another problem with Lasso coefficients is their instability. When multiple variables are highly correlated we may spuriously drop several of them. To get a sense of the amount of variability, in the next snippet we fix \(\lambda\) and then look at the lasso coefficients estimated during cross-validation. We see that by simply removing one fold we can get a very different set of coefficients (nonzero coefficients are in black in the heatmap below). This is because there may be many choices of coefficients with similar predictive power, so the set of nonzero coefficients we end up with can be quite unstable.

import itertools

nobs = X.shape[0]

nfold = 10

# Define folds indices

list_1 = [*range(0, nfold, 1)]*nobs

sample = np.random.choice(nobs,nobs, replace=False).tolist()

foldid = [list_1[index] for index in sample]

# Create a split function (similar to R's split)

def split(x, f):

    count = max(f) + 1

    return tuple( list(itertools.compress(x, (el == i for el in f))) for i in range(count) )

# Split observation indices into folds

list_2 = [*range(0, nobs, 1)]

I = split(list_2, foldid)

from sklearn.linear_model import LassoCV

scale_X = StandardScaler().fit(X).transform(X)

lasso_coef_fold=[]

for b in range(0,len(I)):

    # Boolean mask marking the observations that belong to fold b

    include_idx = set(I[b])  # a set makes the membership test below fast

    mask = np.array([(i in include_idx) for i in range(len(X))])

    # Lasso regression on all observations except those in fold b

    lassocv = LassoCV(random_state=0)

    lassocv.fit(scale_X[~mask], Y[~mask])

    lasso_coef_fold.append(lassocv.coef_)

index_val = ['Fold-1','Fold-2','Fold-3','Fold-4','Fold-5','Fold-6','Fold-7','Fold-8','Fold-9','Fold-10']

df = pd.DataFrame(data= lasso_coef_fold, columns=X.columns, index = index_val).T

df.style.applymap(lambda x: "background-color: white" if x==0 else "background-color: black")

| | Fold-1 | Fold-2 | Fold-3 | Fold-4 | Fold-5 | Fold-6 | Fold-7 | Fold-8 | Fold-9 | Fold-10 |

|---|---|---|---|---|---|---|---|---|---|---|

| LOT | 0.041050 | 0.040789 | 0.039105 | 0.037300 | 0.041148 | 0.043150 | 0.037104 | 0.035392 | 0.037300 | 0.037464 |

| UNITSF | 0.044746 | 0.046055 | 0.047095 | 0.045291 | 0.049540 | 0.043839 | 0.043077 | 0.051535 | 0.047132 | 0.046415 |

| BUILT | 0.001111 | 0.004845 | 0.003385 | 0.003564 | 0.004757 | 0.003220 | 0.003449 | 0.002987 | 0.000929 | 0.004401 |

| BATHS | 0.200578 | 0.189623 | 0.195828 | 0.200489 | 0.192490 | 0.198082 | 0.203624 | 0.200081 | 0.198007 | 0.198827 |

| BEDRMS | 0.055605 | 0.057472 | 0.055982 | 0.055394 | 0.054981 | 0.056335 | 0.054475 | 0.049082 | 0.055994 | 0.052763 |

| DINING | 0.047736 | 0.046748 | 0.047269 | 0.044850 | 0.044751 | 0.046515 | 0.044934 | 0.048129 | 0.046415 | 0.046481 |

| METRO | 0.000000 | 0.000356 | 0.000000 | 0.001081 | 0.001190 | 0.000881 | 0.000000 | 0.003189 | 0.001222 | 0.002415 |

| CRACKS | 0.020332 | 0.020937 | 0.017848 | 0.015932 | 0.019917 | 0.019677 | 0.018395 | 0.023793 | 0.020314 | 0.019614 |

| REGION | 0.083864 | 0.083337 | 0.080464 | 0.081884 | 0.081064 | 0.082150 | 0.078420 | 0.082237 | 0.082466 | 0.082625 |

| METRO3 | 0.007152 | 0.006738 | 0.009395 | 0.009017 | 0.010476 | 0.010692 | 0.007217 | 0.008143 | 0.008373 | 0.007819 |

| PHONE | 0.003223 | 0.004145 | 0.000000 | 0.000000 | 0.003644 | 0.001984 | 0.001331 | 0.003200 | 0.001796 | 0.001127 |

| KITCHEN | -0.003205 | -0.000000 | -0.000955 | -0.002583 | -0.007191 | -0.002836 | -0.000000 | -0.003221 | -0.005402 | -0.000577 |

| MOBILTYP | -0.119085 | -0.103709 | -0.118946 | -0.111606 | -0.106277 | -0.113575 | -0.109086 | -0.103446 | -0.114251 | -0.115418 |

| WINTEROVEN | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| WINTERKESP | 0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 |

| WINTERELSP | 0.026793 | 0.021703 | 0.025619 | 0.026638 | 0.026866 | 0.024999 | 0.024933 | 0.030121 | 0.026697 | 0.027365 |

| WINTERWOOD | 0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 |

| WINTERNONE | -0.006475 | -0.007696 | -0.001862 | -0.000594 | -0.003744 | -0.001674 | -0.002170 | -0.004903 | -0.008437 | -0.001137 |

| NEWC | 0.029223 | 0.027175 | 0.027914 | 0.026626 | 0.027992 | 0.029549 | 0.031211 | 0.027483 | 0.028221 | 0.028651 |

| DISH | -0.096273 | -0.098615 | -0.095563 | -0.093536 | -0.095071 | -0.097641 | -0.094371 | -0.098233 | -0.095227 | -0.096898 |

| WASH | -0.001606 | -0.008013 | -0.012339 | -0.002369 | -0.016570 | -0.002033 | -0.011885 | -0.004852 | -0.007794 | -0.010408 |

| DRY | -0.034784 | -0.032210 | -0.029772 | -0.031367 | -0.027754 | -0.035728 | -0.029114 | -0.029364 | -0.032434 | -0.026725 |

| NUNIT2 | -0.216673 | -0.229393 | -0.213668 | -0.219420 | -0.230576 | -0.219189 | -0.224386 | -0.228164 | -0.217753 | -0.218393 |

| BURNER | -0.000000 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | 0.000000 |

| COOK | -0.000000 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | 0.000000 |

| OVEN | -0.000000 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | 0.000000 |

| REFR | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 |

| DENS | 0.048246 | 0.049359 | 0.046588 | 0.047767 | 0.051190 | 0.046928 | 0.046455 | 0.047423 | 0.049179 | 0.048865 |

| FAMRM | 0.057822 | 0.057013 | 0.057238 | 0.059208 | 0.058518 | 0.055123 | 0.057817 | 0.058604 | 0.059895 | 0.057424 |

| HALFB | 0.103928 | 0.102791 | 0.105183 | 0.104379 | 0.103671 | 0.106806 | 0.112708 | 0.104332 | 0.104481 | 0.108234 |

| KITCH | -0.016848 | -0.015641 | -0.015128 | -0.014620 | -0.015921 | -0.015672 | -0.016561 | -0.013676 | -0.016945 | -0.017092 |

| LIVING | 0.005198 | 0.002324 | 0.003951 | 0.004839 | 0.006106 | 0.005630 | 0.003494 | 0.003993 | 0.004532 | 0.004339 |

| OTHFN | 0.038355 | 0.036114 | 0.039843 | 0.035012 | 0.038077 | 0.037492 | 0.034321 | 0.037525 | 0.037721 | 0.035186 |

| RECRM | 0.021484 | 0.021937 | 0.019965 | 0.023502 | 0.024159 | 0.020679 | 0.019380 | 0.020446 | 0.022242 | 0.020969 |

| CLIMB | 0.012317 | 0.006384 | 0.011059 | 0.011721 | 0.016332 | 0.016591 | 0.011285 | 0.013526 | 0.013106 | 0.010781 |

| ELEV | 0.076095 | 0.083937 | 0.078783 | 0.079432 | 0.089403 | 0.078455 | 0.084076 | 0.083452 | 0.082064 | 0.078135 |

| DIRAC | -0.003499 | -0.003454 | -0.002993 | -0.004058 | -0.003754 | -0.002351 | -0.001929 | -0.002463 | -0.001677 | -0.001690 |

| PORCH | -0.018848 | -0.015829 | -0.016723 | -0.014969 | -0.013677 | -0.014311 | -0.015005 | -0.015080 | -0.016535 | -0.013887 |

| AIRSYS | -0.049124 | -0.052072 | -0.052840 | -0.053260 | -0.051097 | -0.050265 | -0.053449 | -0.053212 | -0.052109 | -0.051032 |

| WELL | -0.000000 | 0.000000 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 |

| WELDUS | -0.024269 | -0.024428 | -0.025118 | -0.022449 | -0.024388 | -0.023465 | -0.022414 | -0.023391 | -0.023995 | -0.026031 |

| STEAM | 0.002214 | 0.003292 | 0.000000 | 0.000000 | 0.002270 | 0.002277 | 0.000000 | 0.004752 | 0.002812 | 0.000000 |

| OARSYS | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| noise1 | 0.005424 | 0.002849 | 0.006610 | 0.003614 | 0.006709 | 0.003801 | 0.002519 | 0.005297 | 0.002566 | 0.005736 |

| noise2 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 |

| noise3 | 0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 |

| noise4 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.001688 | 0.000000 | 0.003442 | 0.000000 | 0.000000 |

| noise5 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000172 |

| noise6 | -0.000805 | -0.001709 | -0.002072 | -0.004038 | -0.001111 | -0.003315 | -0.000000 | -0.004309 | -0.002370 | -0.000000 |

| noise7 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 |

| noise8 | 0.003441 | 0.009192 | 0.004116 | 0.002452 | 0.006297 | 0.004724 | 0.005267 | 0.003611 | 0.005380 | 0.002053 |

| noise9 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000258 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 |

| noise10 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000021 | -0.000000 |

| noise11 | -0.008055 | -0.004641 | -0.005265 | -0.002612 | -0.007669 | -0.005447 | -0.007216 | -0.006012 | -0.007707 | -0.003743 |

| noise12 | -0.006468 | -0.007073 | -0.003561 | -0.002931 | -0.006589 | -0.003944 | -0.005517 | -0.002839 | -0.007282 | -0.005623 |

| noise13 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000212 | 0.000000 | 0.000000 | 0.000000 | 0.002019 | 0.000000 |

| noise14 | -0.000124 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | -0.000000 | 0.000000 |

| noise15 | 0.002332 | 0.004505 | 0.004589 | 0.002373 | 0.004535 | 0.003080 | 0.001490 | 0.004166 | 0.004509 | 0.002482 |

| noise16 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | -0.000000 | 0.000000 | 0.000000 | 0.000000 |

| noise17 | -0.002321 | -0.001854 | -0.003085 | -0.001049 | -0.004635 | -0.000000 | -0.000465 | -0.001222 | -0.002072 | -0.002135 |

| noise18 | 0.000274 | 0.000000 | 0.000000 | 0.000704 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.001272 | 0.000000 |

| noise19 | 0.000000 | 0.000000 | -0.000000 | -0.000000 | -0.000000 | -0.000000 | 0.000000 | -0.000000 | -0.000000 | 0.000000 |

| noise20 | -0.000904 | -0.002203 | -0.001322 | -0.000250 | -0.000000 | -0.000180 | -0.001053 | -0.001291 | -0.005082 | -0.000000 |

| ranking | -0.002614 | -0.003632 | -0.000309 | -0.001322 | -0.002222 | -0.000030 | -0.001472 | -0.002578 | -0.000000 | -0.000000 |
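One way to summarize this instability is to count, for each covariate, the share of leave-one-fold-out fits in which its coefficient is nonzero. The following is a minimal sketch on synthetic data, not the housing data used above: the simulated covariates (two of which are nearly collinear), the fixed penalty `alpha=0.1`, and the helper name `selection_frequency` are all assumptions made for illustration.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler


def selection_frequency(X, y, alpha=0.1, n_splits=10, seed=0):
    """Share of leave-one-fold-out fits in which each coefficient is nonzero."""
    X_std = StandardScaler().fit_transform(X)
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    nonzero = []
    for keep_idx, _ in folds.split(X_std):
        # Fit on all folds but one, holding the penalty fixed
        fit = Lasso(alpha=alpha).fit(X_std[keep_idx], y[keep_idx])
        nonzero.append(fit.coef_ != 0)
    return np.mean(nonzero, axis=0)


# Hypothetical data: columns 0 and 1 are almost identical, columns 2-4 are pure noise
rng = np.random.default_rng(0)
n = 200
z = rng.normal(size=n)
X_sim = np.column_stack([
    z + 0.01 * rng.normal(size=n),
    z + 0.01 * rng.normal(size=n),
    rng.normal(size=(n, 3)),
])
y_sim = z + rng.normal(size=n)

freq = selection_frequency(X_sim, y_sim)
print(freq)
```

A frequency strictly between 0 and 1 flags a covariate whose selection depends on which fold happened to be left out.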

As we have seen above, any interpretation needs to take into account the joint distribution of covariates. One possible heuristic is to consider data-driven subgroups. For example, we can analyze what differentiates observations whose predictions are high from those whose predictions are low. The following code estimates a flexible Lasso model with splines, ranks the observations into a few subgroups according to their predicted outcomes, and then estimates the average covariate value for each subgroup.

import itertools

nobs = X.shape[0]

nfold = 5

# Define folds indices

list_1 = [*range(0, nfold, 1)]*nobs

sample = np.random.choice(nobs,nobs, replace=False).tolist()

foldid = [list_1[index] for index in sample]

# Create split function(similar to R)

def split(x, f):

    count = max(f) + 1

    return tuple( list(itertools.compress(x, (el == i for el in f))) for i in range(count) )

# Split observation indices into folds

list_2 = [*range(0, nobs, 1)]

I = split(list_2, foldid)

lasso_coef_rank=[]

lasso_pred = []

for b in range(0,len(I)):

    # Split data - observations in fold b are flagged True in the boolean mask

    include_idx = set(I[b]) # a set makes the membership test below fast

    mask = np.array([(i in include_idx) for i in range(len(X))])

    # Lasso regression on all observations except those in fold b

    lassocv = LassoCV(random_state=0)

    lassocv.fit(scale_X[~mask], Y[~mask])

    lasso_coef_rank.append(lassocv.coef_)

    # Out-of-fold predictions for the observations in fold b

    lasso_pred.append(lassocv.predict(scale_X[mask]))

y_hat = lasso_pred

df_1 = pd.DataFrame()

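for i in [0,1,2,3,4]:

    df_2 = pd.DataFrame(y_hat[i])

    # Cut the predictions of fold i into quartiles

    # (with pd.cut's default settings, the smallest prediction in each fold falls outside the first bin and becomes NaN)

    b = pd.cut(df_2[0], bins =[np.percentile(df_2,0),np.percentile(df_2,25),np.percentile(df_2,50),

                               np.percentile(df_2,75),np.percentile(df_2,100)], labels = [1,2,3,4])

    df_1 = pd.concat([df_1, b])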

df_1 =df_1.apply(lambda x: pd.factorize(x)[0])

df_1.rename(columns={0:'ranking'}, inplace=True)

df_1 =df_1.reset_index().drop(columns=['index'])

import statsmodels.api as sm

from scipy.stats import norm

import statsmodels.formula.api as smf

# Work on a copy so that adding the 'ranking' column does not also modify X

data = pd.DataFrame(X).copy()

data['ranking'] = df_1['ranking']

data_frame = pd.DataFrame()

for var_name in covariates:

    form = var_name + " ~ " + "0" + "+" + "C(ranking)"

    df1 = smf.ols(formula=form, data=data).fit(cov_type = 'HC2').summary2().tables[1].iloc[1:5, :2] #iloc to stay with rankings 0,1,2,3

    df1.insert(0, 'covariate', var_name)

    df1.insert(3, 'ranking', ['G1','G2','G3','G4'])

    # pass a plain array so that values are inserted by position, not aligned on the index

    df1.insert(4, 'scaling',

               norm.cdf((df1['Coef.'] - np.mean(df1['Coef.']))/np.std(df1['Coef.'])))

    df1.insert(5, 'variation',

               np.std(df1['Coef.'])/np.std(data[var_name]))

    label = []

    for j in range(0,4):

        label += [str(round(df1['Coef.'].iloc[j],3)) + " ("

                  + str(round(df1['Std.Err.'].iloc[j],3)) + ")"]

    df1.insert(6, 'labels', label)

    index = []

    for m in range(0,4):

        index += [str(df1['covariate'].iloc[m]) + "_" + "ranking" + str(m+1)]

    idx = pd.Index(index)

    df1 = df1.set_index(idx)

    # DataFrame.append was removed in pandas 2.0; pd.concat does the same job

    data_frame = pd.concat([data_frame, df1])

data_frame;

labels_data = pd.DataFrame()

for i in range(1,5):

    df_mask = data_frame['ranking']==f"G{i}"

    filtered_df = data_frame[df_mask].reset_index().drop(columns=['index'])

    labels_data[f"ranking{i}"] = filtered_df[['labels']]

labels_data = labels_data.set_index(pd.Index(covariates))

labels_data

| | ranking1 | ranking2 | ranking3 | ranking4 |

|---|---|---|---|---|

| LOT | 49713.31 (1473.048) | 46479.968 (1390.394) | 47806.63 (1427.658) | 47612.513 (1393.569) |

| UNITSF | 2415.869 (24.944) | 2434.834 (24.249) | 2397.706 (23.467) | 2471.907 (26.208) |

| BUILT | 1972.286 (0.301) | 1974.925 (0.294) | 1973.672 (0.299) | 1973.017 (0.299) |

| BATHS | 1.918 (0.009) | 1.975 (0.009) | 1.946 (0.009) | 1.928 (0.009) |

| BEDRMS | 3.218 (0.01) | 3.258 (0.01) | 3.251 (0.01) | 3.243 (0.01) |

| ... | ... | ... | ... | ... |

| noise16 | 0.499 (0.003) | 0.502 (0.003) | 0.498 (0.003) | 0.505 (0.003) |

| noise17 | 0.501 (0.003) | 0.498 (0.003) | 0.502 (0.003) | 0.498 (0.003) |

| noise18 | 0.502 (0.003) | 0.499 (0.003) | 0.5 (0.003) | 0.5 (0.003) |

| noise19 | 0.504 (0.003) | 0.502 (0.003) | 0.498 (0.003) | 0.497 (0.003) |

| noise20 | 0.502 (0.003) | 0.496 (0.003) | 0.501 (0.003) | 0.5 (0.003) |

63 rows × 4 columns

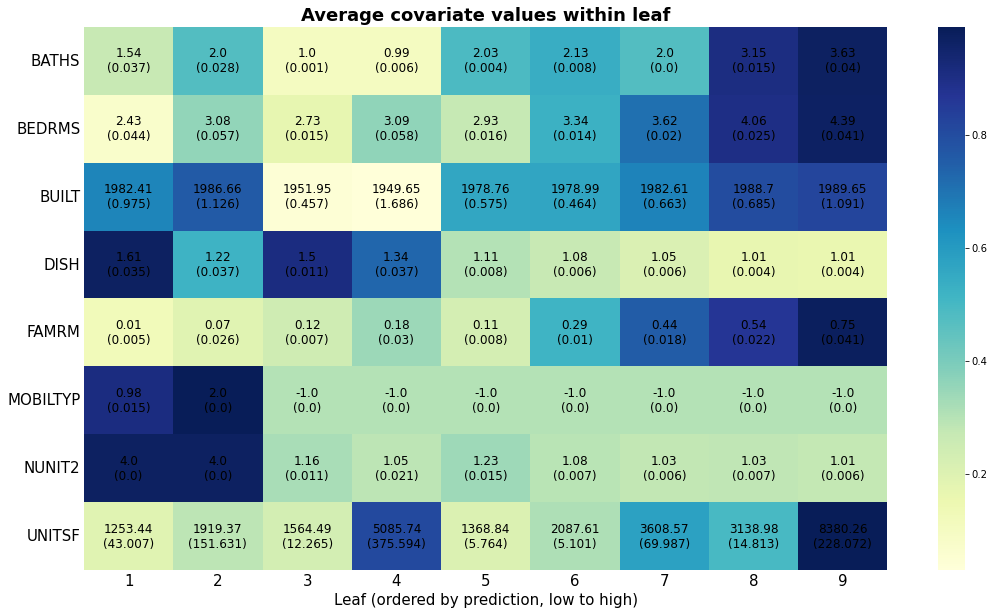

The next heatmap visualizes the results. Note how observations that are ranked higher (i.e., predicted to have higher prices) have more bedrooms and baths, were built more recently, have fewer cracks, and so on. The next snippet of code displays the average covariate value per group along with its standard error. The rows are ordered according to \(Var(E[X_{ij} | G_i]) / Var(X_{ij})\), where \(G_i\) denotes the ranking of observation \(i\). This is a rough normalized measure of how much variation is “explained” by group membership \(G_i\). Brighter colors indicate larger values.
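To make the normalized measure concrete, here is a small sketch on made-up data. It follows the `variation` column computed in the code above, which takes the ratio of standard deviations (the standard deviation of the group means divided by the standard deviation of the covariate), so it is roughly the square root of the expression in the text. The function name `group_variation` and the simulated covariates are assumptions for illustration only.

```python
import numpy as np


def group_variation(values, groups):
    """Std. dev. of the group means divided by the std. dev. of the covariate."""
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    group_means = np.array([values[groups == g].mean() for g in np.unique(groups)])
    return np.std(group_means) / np.std(values)


# Hypothetical example: four equally sized groups
rng = np.random.default_rng(0)
groups_demo = np.repeat([0, 1, 2, 3], 250)

# A covariate whose mean shifts with the group, and one unrelated to the group
informative = groups_demo + rng.normal(scale=0.5, size=1000)
uninformative = rng.normal(size=1000)

ratio_informative = group_variation(informative, groups_demo)
ratio_uninformative = group_variation(uninformative, groups_demo)
print(round(ratio_informative, 3), round(ratio_uninformative, 3))
```

A ratio near one means the covariate varies mostly between groups; a ratio near zero means group membership tells us little about it.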

new_data = pd.DataFrame()

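for i in range(0,4):

    df_mask = data_frame['ranking']==f"G{i+1}"

    filtered_df = data_frame[df_mask]

    # insert the values by position; the row labels differ across groups, so index alignment would produce NaN

    new_data.insert(i,f"G{i+1}",filtered_df['scaling'].to_numpy())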

new_data;

features = covariates

ranks = ['G1','G2','G3','G4']

harvest = np.array(round(new_data,3))

labels_hm = np.array(round(labels_data))

fig, ax = plt.subplots(figsize=(10,15))

# getting the original colormap (plt.cm.get_cmap was removed in Matplotlib 3.9)

orig_map = plt.get_cmap('copper')

# reversing the original colormap using reversed() function

reversed_map = orig_map.reversed()

im = ax.imshow(harvest, cmap=reversed_map, aspect='auto')

# make bar

bar = plt.colorbar(im, shrink=0.2)

# show plot with labels

bar.set_label('scaling')

# Setting the labels

ax.set_xticks(np.arange(len(ranks)))

ax.set_yticks(np.arange(len(features)))

# labeling respective list entries

ax.set_xticklabels(ranks)

ax.set_yticklabels(features)

# Rotate the tick labels and set their alignment.

plt.setp(ax.get_xticklabels(), ha="right",

         rotation_mode="anchor")

# Creating text annotations by using for loop

for i in range(len(features)):

    for j in range(len(ranks)):

        text = ax.text(j, i, labels_hm[i, j],

                       ha="center", va="center", color="w")

ax.set_title("Average covariate values within group (based on prediction ranking)")

fig.tight_layout()

plt.show()

As we just saw above, houses that, e.g., were built more recently (BUILT) or have more baths (BATHS) are associated with larger price predictions.

This sort of interpretation exercise did not rely on reading any coefficients, and in fact it could also be done using any other flexible method, including decision trees and forests.
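As a compact illustration of the same exercise with a different learner, the sketch below ranks observations by out-of-fold predictions from a random forest and averages each covariate within the resulting quartiles. Everything in it is an assumption made for illustration: the synthetic covariates `rooms`, `age` and `noise`, the simulated outcome, and the choice of `RandomForestRegressor` with `cross_val_predict`. It is not the code used to produce the tables above.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_val_predict

# Hypothetical data: the outcome increases with rooms and decreases with age
rng = np.random.default_rng(0)
n = 1000
covs = pd.DataFrame({
    "rooms": rng.integers(1, 7, size=n).astype(float),
    "age": rng.uniform(0, 100, size=n),
    "noise": rng.uniform(size=n),
})
log_price = 0.3 * covs["rooms"] - 0.01 * covs["age"] + rng.normal(scale=0.5, size=n)

# Out-of-fold predictions: each observation is predicted by a model that never saw it
model = RandomForestRegressor(n_estimators=50, random_state=0)
y_hat = cross_val_predict(model, covs, log_price, cv=5)

# Rank observations into quartiles of predicted outcome (G1 = lowest, G4 = highest);
# ranking first guarantees unique bin edges even if some predictions are tied
ranks = pd.Series(y_hat).rank(method="first")
group = pd.qcut(ranks, q=4, labels=["G1", "G2", "G3", "G4"])

# Average covariate value within each subgroup
group_means = covs.groupby(group, observed=True).mean()
print(group_means.round(2))
```

Because the groups are built from predictions only, the same few lines work unchanged if the forest is swapped for a Lasso, a single tree, or any other regressor with a scikit-learn interface.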

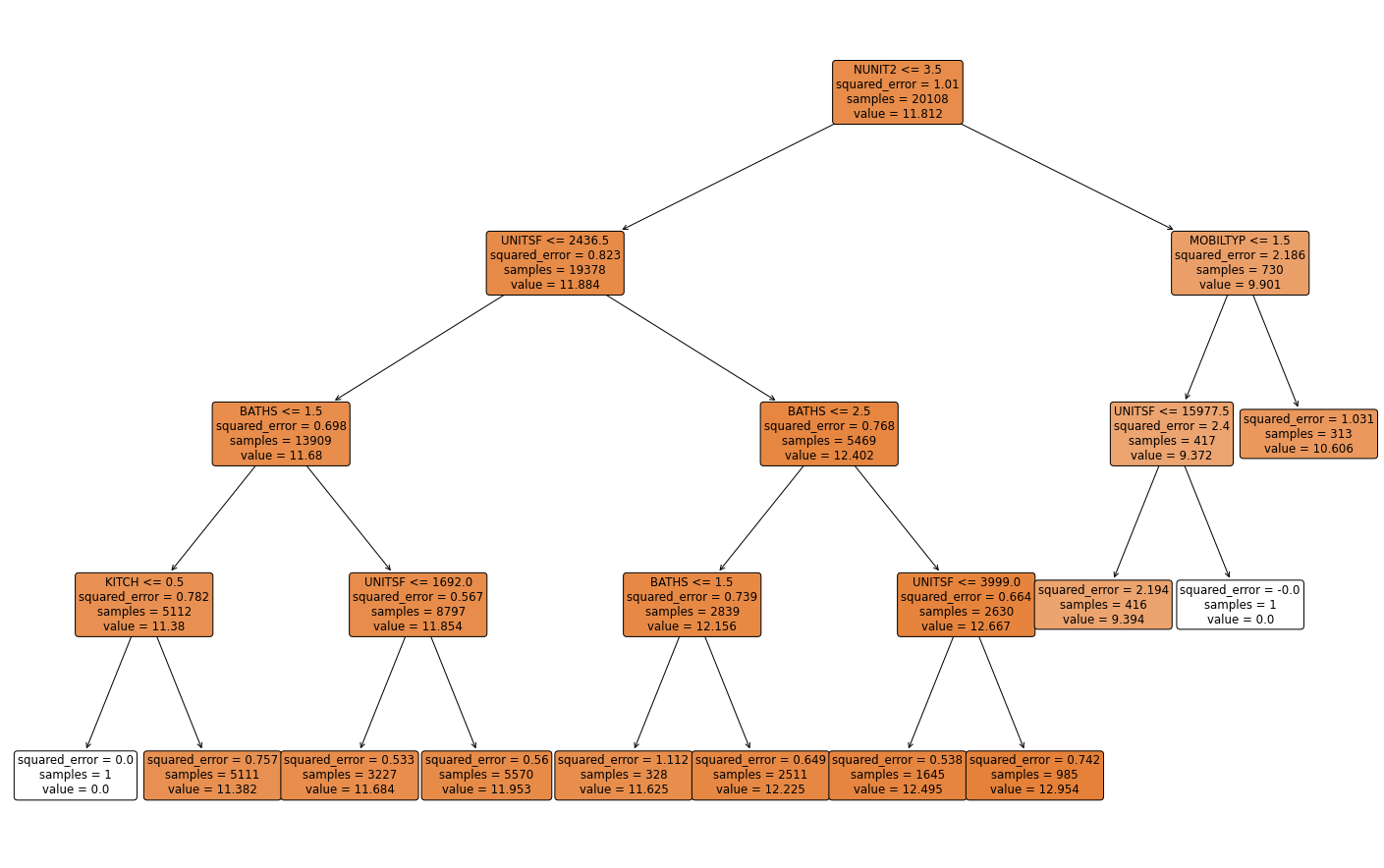

2.2.2. Decision Tree#

This next class of algorithms divides the covariate space into “regions” and estimates a constant prediction within each region.

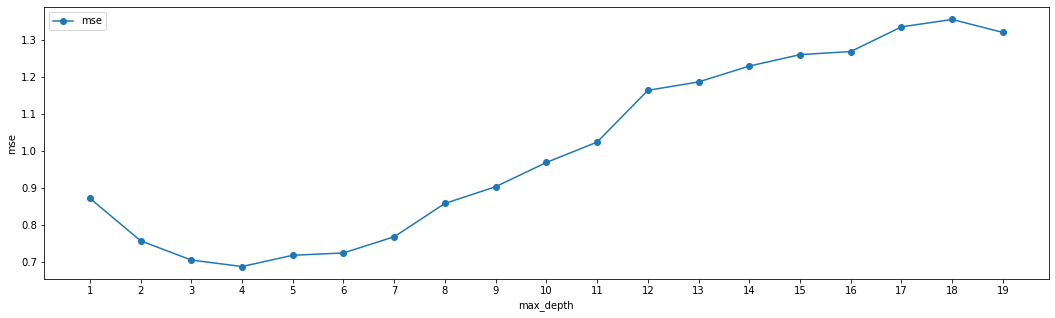

To estimate a decision tree, we follow a recursive partitioning algorithm. At each stage, we select one variable \(j\) and one split point \(s\), and divide the observations into “left” and “right” subsets, depending on whether \(X_{ij} \leq s\) or \(X_{ij} > s\). For regression problems, the variable and split point are often selected so that the sum of the variances of the outcome variable in each “child” subset is smallest. For classification problems, we split to separate the classes. Then, for each child, we separately repeat the process of finding variables and split points. This continues until a minimum subset size is reached, or the improvement falls below some threshold.
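A single step of this recursion can be sketched as an exhaustive search over variables and split points. The function below is a hypothetical helper named `best_split`, not a library function. It scores each candidate by the sum of squared errors within the two children, that is, the child variances weighted by child size, which is an assumption about the exact criterion; candidate split points are taken as midpoints between consecutive distinct values.

```python
import numpy as np


def best_split(X, y, min_leaf=1):
    """Return (variable j, split point s, total SSE) of the best single split."""
    n, p = X.shape
    best = (None, None, np.inf)
    for j in range(p):
        # Candidate split points: midpoints between consecutive distinct values
        values = np.unique(X[:, j])
        for s in (values[:-1] + values[1:]) / 2:
            left = X[:, j] <= s
            n_left = left.sum()
            if n_left < min_leaf or n - n_left < min_leaf:
                continue
            # Sum of squared errors around each child's own mean
            sse = ((y[left] - y[left].mean()) ** 2).sum() \
                + ((y[~left] - y[~left].mean()) ** 2).sum()
            if sse < best[2]:
                best = (j, s, sse)
    return best


# Hypothetical data: the outcome jumps when the first variable crosses 0.5
rng = np.random.default_rng(0)
X_toy = rng.uniform(size=(100, 2))
y_toy = (X_toy[:, 0] > 0.5).astype(float)

j_star, s_star, sse_star = best_split(X_toy, y_toy)
print(j_star, round(s_star, 3), sse_star)
```

Growing a full tree amounts to calling such a search again on each child until a stopping rule applies.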

At prediction time, to find the prediction for some point \(x\), we follow the tree we built, going left or right according to the selected variables and split points, until we reach a terminal node. Then, for regression problems, the predicted value at \(x\) is the average outcome of the training observations in the same partition as \(x\). For classification problems, we output the majority class in the node.
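The traversal can be written out by hand against a fitted scikit-learn tree, whose `tree_` attribute exposes the arrays `children_left`, `children_right`, `feature`, `threshold` and `value`. The sketch below uses simulated data and a hypothetical helper `predict_one`; it is meant only to show that walking the splits reproduces what `predict` returns.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def predict_one(fitted_tree, x):
    """Follow the splits of a fitted regression tree down to a terminal node."""
    t = fitted_tree.tree_
    x = np.asarray(x, dtype=np.float32)  # scikit-learn compares features as float32
    node = 0  # start at the root
    while t.children_left[node] != -1:  # -1 marks a terminal node
        if x[t.feature[node]] <= t.threshold[node]:
            node = t.children_left[node]
        else:
            node = t.children_right[node]
    # For regression, the stored value is the mean training outcome in that leaf
    return t.value[node][0, 0]


# Hypothetical data: a nonlinear signal in the first covariate plus noise
rng = np.random.default_rng(0)
X_demo = rng.uniform(-4, 4, size=(300, 2))
signal = np.where(X_demo[:, 0] < 0, np.cos(2 * X_demo[:, 0]), 1 - np.sin(X_demo[:, 0]))
y_demo = signal + rng.normal(size=300)

demo_tree = DecisionTreeRegressor(max_depth=3, random_state=0).fit(X_demo, y_demo)
manual = np.array([predict_one(demo_tree, row) for row in X_demo])
print(np.allclose(manual, demo_tree.predict(X_demo)))
```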

from sklearn.tree import DecisionTreeRegressor

import graphviz

from sklearn import tree

from sklearn.tree import export_graphviz

from sklearn.metrics import accuracy_score

from pandas import Series

from simple_colors import *

import statsmodels.api as sm

import statsmodels.formula.api as smf

from scipy.stats import norm

from sklearn import metrics

from sklearn.metrics import r2_score

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

#Here we define our X and Y variable

Y = data.loc[:,outcome]

XX = data.loc[:,covariates]

# we split data in train and test

x_train, x_test, y_train, y_test = train_test_split(XX.to_numpy(), Y, test_size=.3)

dt = DecisionTreeRegressor( max_depth=15, random_state=0)

tree1 = dt.fit(x_train,y_train)

At this point, we have barely constrained the complexity of the tree (the only limit is a maximum depth of 15), so it’s likely too deep and probably overfits. Here’s a plot of what we have so far (without bothering to label the splits to avoid clutter).
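A quick way to see the overfitting is to compare training and test error for a shallow and a deep tree. The sketch below does this on simulated data that mimics the nonlinear example from the start of the chapter; the sample size, the depths 3 and 15, and all variable names are assumptions chosen for illustration rather than results for the housing data.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Hypothetical data: one covariate, nonlinear conditional mean, unit-variance noise
rng = np.random.default_rng(0)
x_sim = rng.uniform(-4, 4, size=(2000, 1))
mu_sim = np.where(x_sim[:, 0] < 0, np.cos(2 * x_sim[:, 0]), 1 - np.sin(x_sim[:, 0]))
y_sim = mu_sim + rng.normal(size=2000)

xs_train, xs_test, ys_train, ys_test = train_test_split(
    x_sim, y_sim, test_size=0.3, random_state=0
)

# Record (training MSE, test MSE) for a shallow and a deep tree
results = {}
for depth in (3, 15):
    fit = DecisionTreeRegressor(max_depth=depth, random_state=0).fit(xs_train, ys_train)
    results[depth] = (
        mean_squared_error(ys_train, fit.predict(xs_train)),
        mean_squared_error(ys_test, fit.predict(xs_test)),
    )

for depth, (mse_train, mse_test) in results.items():
    print(f"max_depth={depth}: train MSE={mse_train:.3f}, test MSE={mse_test:.3f}")
```

The deep tree fits the training sample much more closely, but that gain does not carry over to the held-out observations, which is the usual signature of overfitting.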

from sklearn import tree

plt.figure(figsize=(18,5))

tree.plot_tree(dt)


Text(0.04282724199001771, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 9.903'),

Text(0.04411527934310095, 0.21875, 'X[39] <= 1.5\nsquared_error = 0.241\nsamples = 367\nvalue = 11.637'),

Text(0.04379327000483014, 0.15625, 'X[56] <= 0.999\nsquared_error = 0.234\nsamples = 366\nvalue = 11.633'),

Text(0.04347126066655933, 0.09375, 'X[62] <= 0.053\nsquared_error = 0.229\nsamples = 365\nvalue = 11.629'),

Text(0.04314925132828852, 0.03125, 'squared_error = 0.176\nsamples = 14\nvalue = 11.983'),

Text(0.04379327000483014, 0.03125, 'squared_error = 0.226\nsamples = 351\nvalue = 11.615'),

Text(0.04411527934310095, 0.09375, 'squared_error = -0.0\nsamples = 1\nvalue = 13.039'),

Text(0.04443728868137176, 0.15625, 'squared_error = 0.0\nsamples = 1\nvalue = 13.305'),

Text(0.04995169859925938, 0.34375, 'X[22] <= 1.5\nsquared_error = 0.281\nsamples = 69\nvalue = 11.816'),

Text(0.047818386733215264, 0.28125, 'X[61] <= 0.94\nsquared_error = 0.208\nsamples = 54\nvalue = 11.971'),

Text(0.04669135404926743, 0.21875, 'X[62] <= 0.646\nsquared_error = 0.182\nsamples = 52\nvalue = 11.937'),

Text(0.04540331669618419, 0.15625, 'X[45] <= 0.63\nsquared_error = 0.123\nsamples = 26\nvalue = 12.108'),

Text(0.04475929801964257, 0.09375, 'X[45] <= 0.186\nsquared_error = 0.071\nsamples = 15\nvalue = 12.273'),

Text(0.04443728868137176, 0.03125, 'squared_error = 0.004\nsamples = 4\nvalue = 11.956'),

Text(0.04508130735791338, 0.03125, 'squared_error = 0.046\nsamples = 11\nvalue = 12.388'),

Text(0.04604733537272581, 0.09375, 'X[44] <= 0.637\nsquared_error = 0.105\nsamples = 11\nvalue = 11.884'),

Text(0.045725326034455, 0.03125, 'squared_error = 0.007\nsamples = 3\nvalue = 12.236'),

Text(0.046369344710996616, 0.03125, 'squared_error = 0.078\nsamples = 8\nvalue = 11.752'),

Text(0.04797939140235067, 0.15625, 'X[1] <= 1310.0\nsquared_error = 0.183\nsamples = 26\nvalue = 11.765'),

Text(0.047335372725809045, 0.09375, 'X[47] <= 0.536\nsquared_error = 0.156\nsamples = 14\nvalue = 11.539'),

Text(0.04701336338753824, 0.03125, 'squared_error = 0.121\nsamples = 7\nvalue = 11.264'),

Text(0.04765738206407986, 0.03125, 'squared_error = 0.04\nsamples = 7\nvalue = 11.814'),

Text(0.04862341007889229, 0.09375, 'X[10] <= 1.5\nsquared_error = 0.086\nsamples = 12\nvalue = 12.029'),

Text(0.048301400740621475, 0.03125, 'squared_error = 0.04\nsamples = 11\nvalue = 12.097'),

Text(0.0489454194171631, 0.03125, 'squared_error = -0.0\nsamples = 1\nvalue = 11.29'),

Text(0.0489454194171631, 0.21875, 'X[59] <= 0.209\nsquared_error = 0.061\nsamples = 2\nvalue = 12.859'),

Text(0.04862341007889229, 0.15625, 'squared_error = 0.0\nsamples = 1\nvalue = 13.106'),

Text(0.049267428755433905, 0.15625, 'squared_error = 0.0\nsamples = 1\nvalue = 12.612'),

Text(0.05208501046530349, 0.28125, 'X[58] <= 0.37\nsquared_error = 0.144\nsamples = 15\nvalue = 11.257'),

Text(0.05055546610851715, 0.21875, 'X[45] <= 0.784\nsquared_error = 0.022\nsamples = 5\nvalue = 10.835'),

Text(0.04991144743197553, 0.15625, 'X[60] <= 0.356\nsquared_error = 0.005\nsamples = 3\nvalue = 10.936'),

Text(0.04958943809370472, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 11.035'),

Text(0.050233456770246335, 0.09375, 'X[18] <= -4.0\nsquared_error = 0.001\nsamples = 2\nvalue = 10.887'),

Text(0.04991144743197553, 0.03125, 'squared_error = 0.0\nsamples = 1\nvalue = 10.915'),

Text(0.05055546610851715, 0.03125, 'squared_error = -0.0\nsamples = 1\nvalue = 10.859'),

Text(0.051199484785058764, 0.15625, 'X[45] <= 0.898\nsquared_error = 0.007\nsamples = 2\nvalue = 10.683'),

Text(0.05087747544678796, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 10.768'),

Text(0.05152149412332958, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 10.597'),

Text(0.05361455482208984, 0.21875, 'X[45] <= 0.849\nsquared_error = 0.071\nsamples = 10\nvalue = 11.468'),

Text(0.05280953147641282, 0.15625, 'X[45] <= 0.41\nsquared_error = 0.048\nsamples = 8\nvalue = 11.377'),

Text(0.052165512799871194, 0.09375, 'X[51] <= 0.308\nsquared_error = 0.007\nsamples = 2\nvalue = 11.694'),

Text(0.05184350346160039, 0.03125, 'squared_error = 0.0\nsamples = 1\nvalue = 11.613'),

Text(0.052487522138142007, 0.03125, 'squared_error = 0.0\nsamples = 1\nvalue = 11.775'),

Text(0.053453550152954436, 0.09375, 'X[58] <= 0.812\nsquared_error = 0.017\nsamples = 6\nvalue = 11.271'),

Text(0.053131540814683624, 0.03125, 'squared_error = 0.006\nsamples = 5\nvalue = 11.222'),

Text(0.05377555949122525, 0.03125, 'squared_error = -0.0\nsamples = 1\nvalue = 11.513'),

Text(0.054419578167766866, 0.15625, 'X[46] <= 0.191\nsquared_error = 0.0\nsamples = 2\nvalue = 11.831'),

Text(0.054097568829496054, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 11.849'),

Text(0.05474158750603768, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 11.813'),

Text(0.06282704073418129, 0.53125, 'X[59] <= 0.013\nsquared_error = 2.146\nsamples = 150\nvalue = 11.071'),

Text(0.06160441152793431, 0.46875, 'X[4] <= 2.5\nsquared_error = 28.394\nsamples = 2\nvalue = 5.329'),

Text(0.0612824021896635, 0.40625, 'squared_error = 0.0\nsamples = 1\nvalue = 10.657'),

Text(0.06192642086620512, 0.40625, 'squared_error = 0.0\nsamples = 1\nvalue = 0.0'),

Text(0.06404966994042827, 0.46875, 'X[11] <= 1.5\nsquared_error = 1.339\nsamples = 148\nvalue = 11.148'),

Text(0.06257043954274674, 0.40625, 'X[50] <= 0.995\nsquared_error = 0.5\nsamples = 146\nvalue = 11.223'),

Text(0.0605780067621961, 0.34375, 'X[62] <= 0.943\nsquared_error = 0.389\nsamples = 143\nvalue = 11.253'),

Text(0.05820318789244888, 0.28125, 'X[49] <= 0.07\nsquared_error = 0.359\nsamples = 134\nvalue = 11.304'),

Text(0.05683464820479794, 0.21875, 'X[61] <= 0.706\nsquared_error = 1.462\nsamples = 8\nvalue = 10.542'),

Text(0.05602962485912091, 0.15625, 'X[0] <= 8250.0\nsquared_error = 0.459\nsamples = 6\nvalue = 11.15'),

Text(0.055385606182579296, 0.09375, 'X[53] <= 0.44\nsquared_error = 0.038\nsamples = 3\nvalue = 10.517'),

Text(0.055063596844308484, 0.03125, 'squared_error = 0.006\nsamples = 2\nvalue = 10.386'),

Text(0.05570761552085011, 0.03125, 'squared_error = -0.0\nsamples = 1\nvalue = 10.779'),

Text(0.05667364353566253, 0.09375, 'X[57] <= 0.517\nsquared_error = 0.08\nsamples = 3\nvalue = 11.782'),

Text(0.056351634197391726, 0.03125, 'squared_error = 0.0\nsamples = 1\nvalue = 12.15'),

Text(0.05699565287393334, 0.03125, 'squared_error = 0.019\nsamples = 2\nvalue = 11.599'),

Text(0.05763967155047496, 0.15625, 'X[33] <= 0.5\nsquared_error = 0.041\nsamples = 2\nvalue = 8.72'),

Text(0.057317662212204155, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 8.923'),

Text(0.05796168088874577, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 8.517'),

Text(0.05957172758009982, 0.21875, 'X[48] <= 0.996\nsquared_error = 0.25\nsamples = 126\nvalue = 11.353'),

Text(0.059249718241829015, 0.15625, 'X[45] <= 0.924\nsquared_error = 0.218\nsamples = 125\nvalue = 11.369'),

Text(0.05860569956528739, 0.09375, 'X[45] <= 0.844\nsquared_error = 0.21\nsamples = 119\nvalue = 11.397'),

Text(0.058283690227016585, 0.03125, 'squared_error = 0.201\nsamples = 111\nvalue = 11.367'),

Text(0.0589277089035582, 0.03125, 'squared_error = 0.142\nsamples = 8\nvalue = 11.813'),

Text(0.05989373691837063, 0.09375, 'X[38] <= 1.5\nsquared_error = 0.065\nsamples = 6\nvalue = 10.82'),

Text(0.05957172758009982, 0.03125, 'squared_error = 0.0\nsamples = 4\nvalue = 10.994'),

Text(0.060215746256641445, 0.03125, 'squared_error = 0.015\nsamples = 2\nvalue = 10.474'),

Text(0.05989373691837063, 0.15625, 'squared_error = -0.0\nsamples = 1\nvalue = 9.306'),

Text(0.06295282563194332, 0.28125, 'X[53] <= 0.76\nsquared_error = 0.221\nsamples = 9\nvalue = 10.494'),

Text(0.06263081629367252, 0.21875, 'X[50] <= 0.901\nsquared_error = 0.108\nsamples = 8\nvalue = 10.369'),

Text(0.06182579294799549, 0.15625, 'X[57] <= 0.575\nsquared_error = 0.013\nsamples = 6\nvalue = 10.535'),

Text(0.061181774271453875, 0.09375, 'X[41] <= 0.5\nsquared_error = 0.003\nsamples = 3\nvalue = 10.636'),

Text(0.06085976493318306, 0.03125, 'squared_error = 0.0\nsamples = 2\nvalue = 10.597'),

Text(0.06150378360972468, 0.03125, 'squared_error = 0.0\nsamples = 1\nvalue = 10.714'),

Text(0.06246981162453711, 0.09375, 'X[52] <= 0.83\nsquared_error = 0.002\nsamples = 3\nvalue = 10.433'),

Text(0.062147802286266304, 0.03125, 'squared_error = 0.0\nsamples = 2\nvalue = 10.463'),

Text(0.06279182096280791, 0.03125, 'squared_error = -0.0\nsamples = 1\nvalue = 10.373'),

Text(0.06343583963934954, 0.15625, 'X[54] <= 0.47\nsquared_error = 0.065\nsamples = 2\nvalue = 9.871'),

Text(0.06311383030107873, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 10.127'),

Text(0.06375784897762035, 0.09375, 'squared_error = 0.0\nsamples = 1\nvalue = 9.616'),

Text(0.06327483497021413, 0.21875, 'squared_error = 0.0\nsamples = 1\nvalue = 11.493'),

Text(0.06456287232329738, 0.34375, 'X[48] <= 0.548\nsquared_error = 3.655\nsamples = 3\nvalue = 9.772'),

Text(0.06424086298502657, 0.28125, 'X[50] <= 0.997\nsquared_error = 0.086\nsamples = 2\nvalue = 11.114'),

Text(0.06391885364675576, 0.21875, 'squared_error = 0.0\nsamples = 1\nvalue = 10.82'),

Text(0.06456287232329738, 0.21875, 'squared_error = -0.0\nsamples = 1\nvalue = 11.408'),

Text(0.06488488166156818, 0.28125, 'squared_error = 0.0\nsamples = 1\nvalue = 7.09'),

Text(0.0655289003381098, 0.40625, 'X[52] <= 0.705\nsquared_error = 32.469\nsamples = 2\nvalue = 5.698'),

Text(0.06520689099983899, 0.34375, 'squared_error = 0.0\nsamples = 1\nvalue = 11.396'),

Text(0.06585090967638062, 0.34375, 'squared_error = 0.0\nsamples = 1\nvalue = 0.0'),