21. Debiased ML for Partially Linear Model in R

# install and load the packages

install.packages("dagitty")

install.packages("ggdag")

library(dagitty)

library(ggdag)


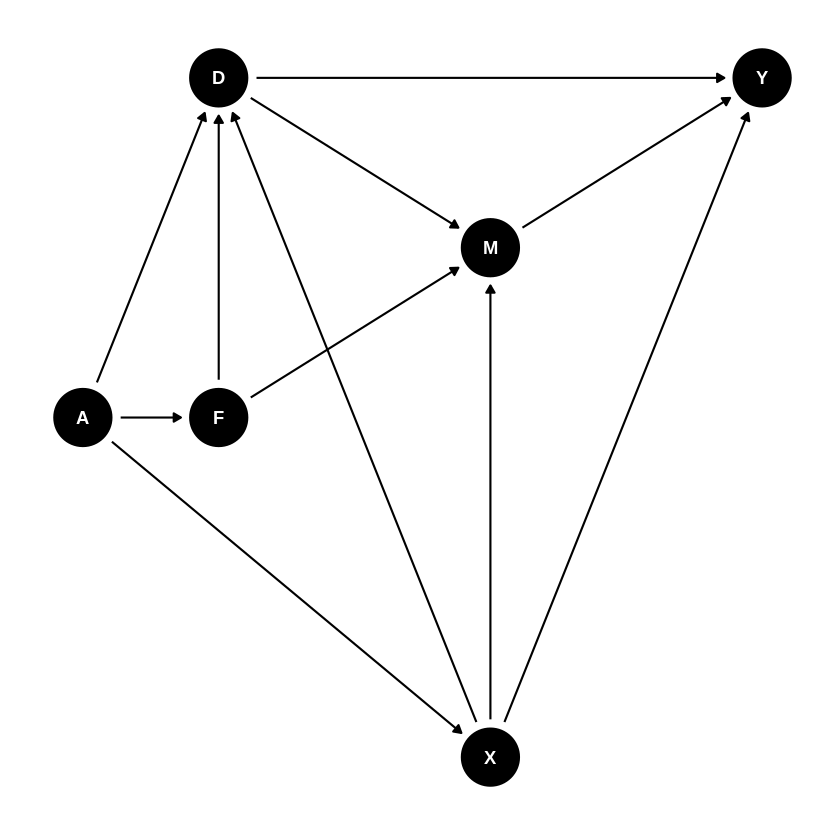

Graphs for 401(K) Analysis

Here we have

\(Y\) – net financial assets;

\(X\) – worker characteristics (income, family size, other retirement plans; see lecture notes for details);

\(F\) – latent (unobserved) firm characteristics;

\(D\) – 401(K) eligibility, determined by \(F\) and \(X\).

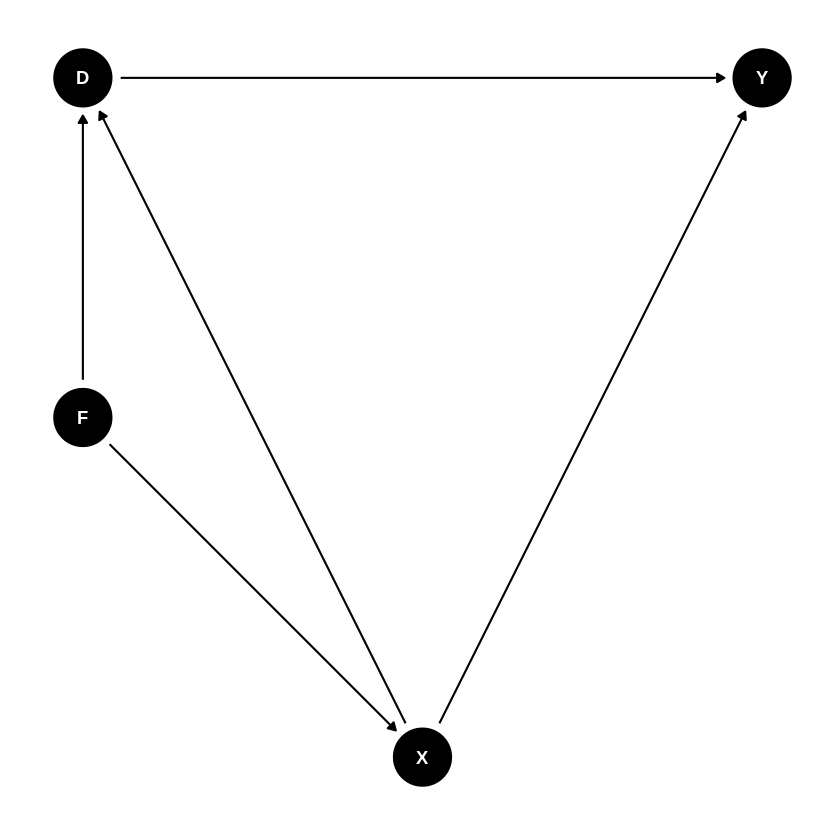

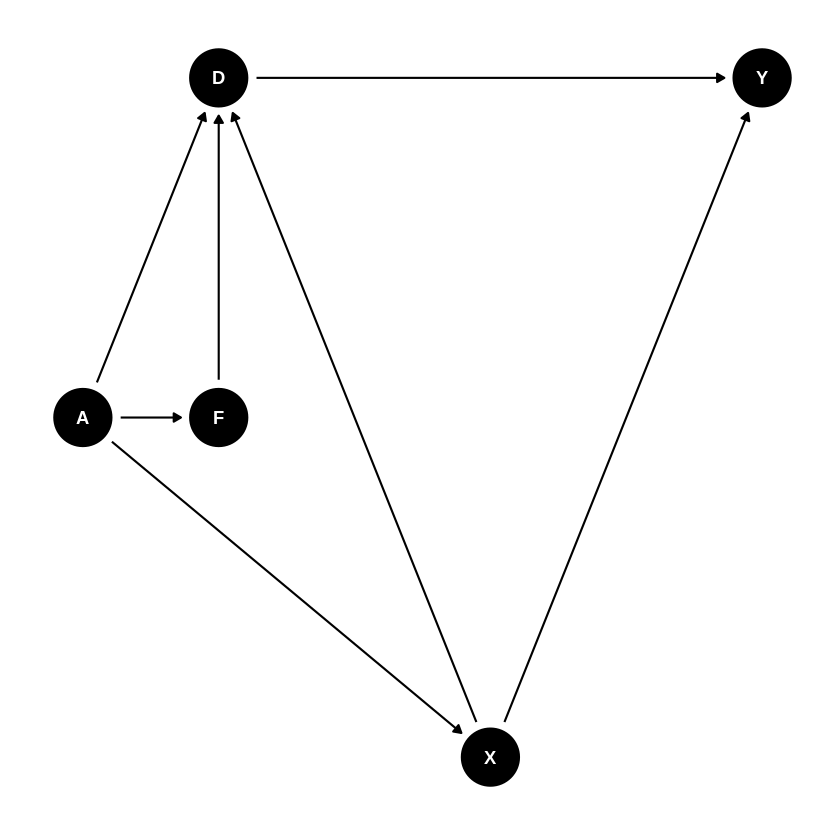

21.1. State one graph (where F determines X) and plot it

# generate a DAG and plot it

G1 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

D -> Y

X -> D

F -> X

F -> D

X -> Y}')

ggdag(G1)+ theme_dag()

List the minimal adjustment sets that identify the causal effect \(D \to Y\):

adjustmentSets( G1, "D", "Y",effect="total" )

{ X }

What is the underlying principle?

Here conditioning on X blocks the backdoor paths from Y to D (Pearl). Dagitty correctly finds X, and it also makes the correct decisions when we consider more elaborate structures. Why do we want to consider more elaborate structures? The empirical problem itself requires us to do so!
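The backdoor argument can be checked path by path. The sketch below restates the first graph without plot positions, marks F with dagitty's `latent` attribute, and uses `paths()` to list every path between D and Y, first with nothing conditioned on and then with X conditioned on. The names `g`, `p0` and `pX` are illustrative and not part of the original notebook.

```r
library(dagitty)

# Same structure as G1 (F determines X); plot positions omitted
g <- dagitty('dag{
  D [exposure]
  Y [outcome]
  F [latent]
  D -> Y
  X -> D
  X -> Y
  F -> X
  F -> D
}')

# All paths between D and Y and whether each is open with no conditioning
p0 <- paths(g, "D", "Y")
print(data.frame(path = p0$paths, open = p0$open))

# The same paths after conditioning on X
pX <- paths(g, "D", "Y", Z = "X")
print(data.frame(path = pX$paths, open = pX$open))
```

After conditioning on X, only the directed path from D to Y should remain open, which is what the backdoor criterion requires.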

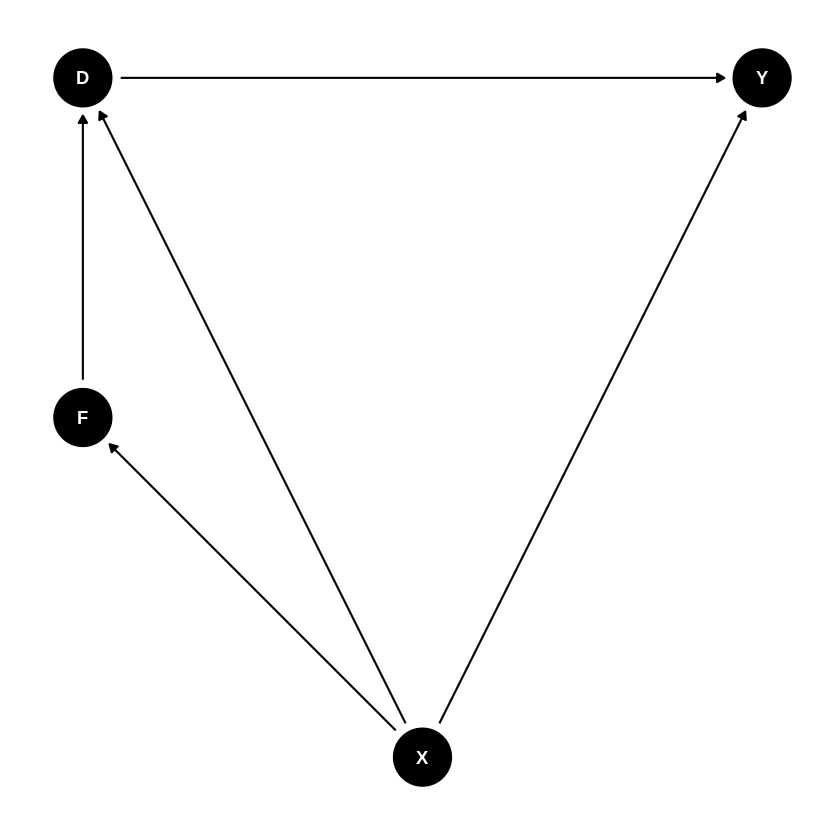

Another graph (where \(X\) determines \(F\)):

# generate the DAG and plot it

G2 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

D -> Y

X -> D

X -> F

F -> D

X -> Y}')

ggdag(G2)+ theme_dag()

adjustmentSets( G2, "D", "Y", effect="total" )

{ X }

One more graph (encompassing the previous ones), where (F, X) are jointly determined by latent factors \(A\). In fact, we can allow the whole triple (D, F, X) to be jointly determined by latent factors \(A\).

This is a much more realistic graph to consider.

G3 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

A [unobserved, pos="-1, -1"]

D -> Y

X -> D

F -> D

A -> F

A -> X

A -> D

X -> Y}')

adjustmentSets( G3, "D", "Y", effect="total" )

ggdag(G3)+ theme_dag()

{ X }
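Since all three graphs so far yield the same answer, the check can also be run in one pass. The sketch below restates the three structures compactly (positions omitted, unobserved nodes marked `latent`) and asks `isAdjustmentSet()` whether {X} is valid in each; the list name `graphs` and the vector `ok` are illustrative.

```r
library(dagitty)

# Compact restatements of the three graphs above
graphs <- list(
  F_determines_X = dagitty('dag{
    F [latent]
    D -> Y
    X -> D
    X -> Y
    F -> X
    F -> D
  }'),
  X_determines_F = dagitty('dag{
    F [latent]
    D -> Y
    X -> D
    X -> Y
    X -> F
    F -> D
  }'),
  latent_A = dagitty('dag{
    F [latent]
    A [latent]
    D -> Y
    X -> D
    X -> Y
    F -> D
    A -> F
    A -> X
    A -> D
  }')
)

# Is {X} a valid adjustment set for the effect of D on Y in each graph?
ok <- sapply(graphs, function(g) {
  isAdjustmentSet(g, "X", exposure = "D", outcome = "Y")
})
print(ok)
```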

Threat to Identification: What if \(F\) also directly affects \(Y\)? (Note that there are no valid adjustment sets in this case.)

G4 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

A [unobserved, pos="-1, -1"]

D -> Y

X -> D

F -> D

A -> F

A -> X

A -> D

F -> Y

X -> Y}')

ggdag(G4)+ theme_dag()

adjustmentSets( G4, "D", "Y",effect="total" )

Note that no output means that there is no valid adjustment set (among the observed variables).
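Rather than relying on the absence of printed output, the empty result can be tested explicitly. The sketch below rebuilds the same structure without positions (unobserved nodes marked `latent`), checks that `adjustmentSets()` returns nothing, and lists the paths that stay open after conditioning on X. The names `g4`, `sets` and `pX` are illustrative.

```r
library(dagitty)

# Same structure as G4: F now also affects Y directly
g4 <- dagitty('dag{
  F [latent]
  A [latent]
  D -> Y
  X -> D
  X -> Y
  F -> D
  F -> Y
  A -> F
  A -> X
  A -> D
}')

# An empty result means no valid adjustment set among observed variables
sets <- adjustmentSets(g4, "D", "Y", effect = "total")
cat("number of valid adjustment sets:", length(sets), "\n")

# Paths between D and Y that remain open after conditioning on X
pX <- paths(g4, "D", "Y", Z = "X")
print(pX$paths[pX$open])
```

Besides the causal path from D to Y, the open paths should be backdoor paths that run through F, which cannot be blocked because F is unobserved.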

21.2. How can F affect Y directly? Is it reasonable?

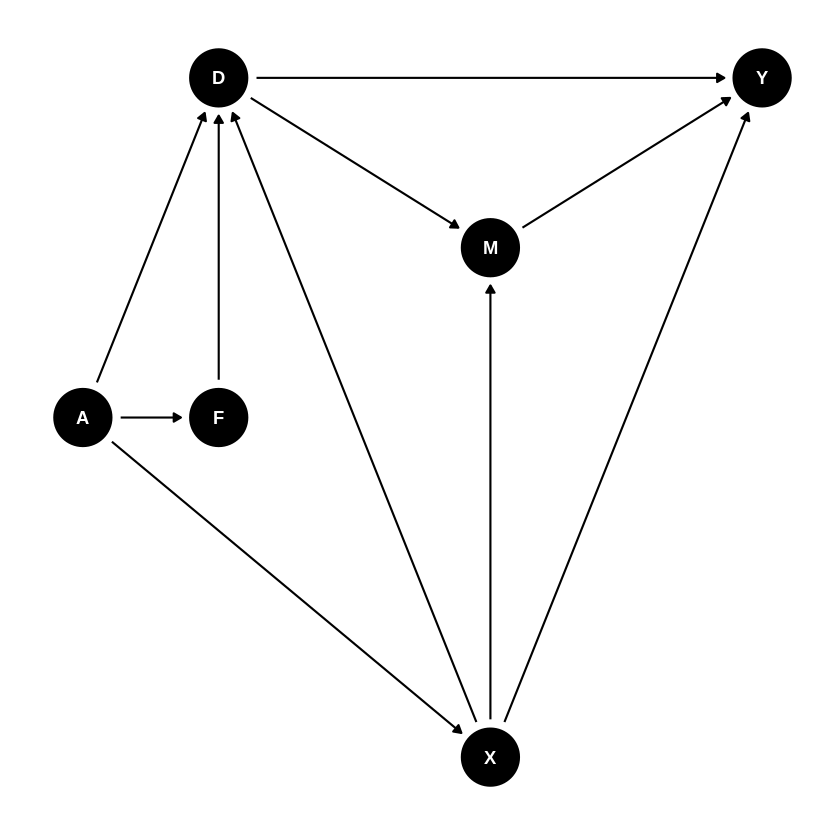

Introduce the match amount \(M\) (a very important mediator; why a mediator?). \(M\) is not observed. Luckily, adjusting for \(X\) still works if there is no \(F \to M\) arrow.

G5 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

A [unobserved, pos="-1, -1"]

M [unobserved, pos="2, -.5"]

D -> Y

X -> D

F -> D

A -> F

A -> X

A -> D

D -> M

M -> Y

X -> M

X -> Y}')

print( adjustmentSets( G5, "D", "Y",effect="total" ) )

ggdag(G5)+ theme_dag()

{ X }
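One way to answer the "why mediator?" question for the graph above is to look at the directed paths from D to Y: M lies on one of them, so part of the effect of D is transmitted through M. The sketch below restates the graph without positions and uses `paths()` with `directed = TRUE` together with `descendants()` and `ancestors()`; the names `g5` and `causal` are illustrative.

```r
library(dagitty)

# Same structure as G5: match amount M sits between D and Y
g5 <- dagitty('dag{
  F [latent]
  A [latent]
  M [latent]
  D -> Y
  X -> D
  X -> Y
  X -> M
  F -> D
  A -> F
  A -> X
  A -> D
  D -> M
  M -> Y
}')

# Directed (causal) paths from D to Y
causal <- paths(g5, "D", "Y", directed = TRUE)
print(causal$paths)

# M is caused by D and in turn causes Y
m_after_d  <- "M" %in% descendants(g5, "D")
m_before_y <- "M" %in% ancestors(g5, "Y")
print(c(m_after_d = m_after_d, m_before_y = m_before_y))
```

Because M is a descendant of D on a causal path, it must not be adjusted for when the target is the total effect, which is why its being unobserved does no harm here.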

If there is an \(F \to M\) arrow, then adjusting for \(X\) is not sufficient.

G6 = dagitty('dag{

Y [outcome,pos="4, 0"]

D [exposure,pos="0, 0"]

X [confounder, pos="2,-2"]

F [unobserved, pos="0, -1"]

A [unobserved, pos="-1, -1"]

M [unobserved, pos="2, -.5"]

D -> Y

X -> D

F -> D

A -> F

A -> X

D -> M

F -> M

A -> D

M -> Y

X -> M

X -> Y}')

print( adjustmentSets( G6, "D", "Y", effect="total" ) )

ggdag(G6)+ theme_dag()
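The role of the single \(F \to M\) arrow can be isolated by building the graph with and without it and asking whether {X} is a valid adjustment set in each case. This is a sketch under the same structure as above, with positions omitted and unobserved nodes marked `latent`; `base_edges`, `g_without` and `g_with` are illustrative names.

```r
library(dagitty)

# Edges shared by both versions of the graph
base_edges <- '
  F [latent]
  A [latent]
  M [latent]
  D -> Y
  X -> D
  X -> Y
  X -> M
  F -> D
  A -> F
  A -> X
  A -> D
  D -> M
  M -> Y
'

g_without <- dagitty(paste0("dag{", base_edges, "}"))
g_with    <- dagitty(paste0("dag{", base_edges, "F -> M }"))

# Is {X} valid for the total effect of D on Y in each graph?
valid_without <- isAdjustmentSet(g_without, "X", exposure = "D", outcome = "Y")
valid_with    <- isAdjustmentSet(g_with, "X", exposure = "D", outcome = "Y")
print(c(without_F_to_M = valid_without, with_F_to_M = valid_with))

# Paths that remain open given X once F -> M is present
pX <- paths(g_with, "D", "Y", Z = "X")
print(pX$paths[pX$open])
```

With the extra arrow, a backdoor path from D through F and M to Y stays open given X, and neither F nor M is available for adjustment.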